GSoC/2021/StatusReports/PhuocKhanhLE

Revision as of 21:55, 10 July 2021

Digikam: Faces engine improvements

Image Quality Sorter is a digiKam tool that helps users label images as accepted, pending, or rejected. However, the current implementation does not perform well. My proposal presents my approach to improving this functionality.

Mentors : Gilles Caulier, Maik Qualmann, Thanh Trung Dinh

Community Bonding

Community Bonding is the time for preparation. I dedicate my first post to describing this important period. Thanks to my mentors Gilles Caulier, Maik Qualmann and Thanh Trung Dinh for helping me prepare for this summer's work.

I have spent these 3 weeks reading the huge digiKam codebase, preparing my coding environment, and studying in depth the algorithm that I am about to implement. This is the branch that will hold my work for GSoC'21.

After reading enough documentation and mathematics, I started preparing a base for testing each functionality of the Image Quality Sorter (IQS). I found this to be the most important task of this period, because it gives me a tool to evaluate my future work without having to think further about how to test it. There are 2 types of unit tests: unit tests for each factor (for example: detectionblur) and unit tests based on pre-defined use cases.

Although these tests cannot pass yet, they will be useful in the future.

Blur detection (first week : June 07 - June 14)

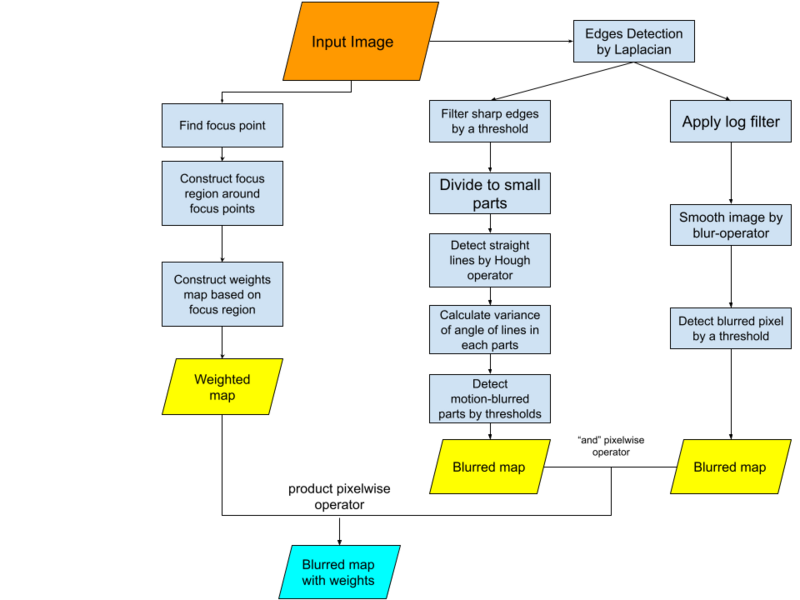

The figure above shows the design of my algorithm.

As discussed in the proposal, there are 2 types of blur: motion blur and defocused blur. Because their natures differ, each type needs its own detector. Defocused blur appears when the edges of objects are fuzzy, while motion blur appears when the object or the camera moves during capture.

By observation, I found that defocused blur is the disappearance of edges, while motion blur is the appearance of many parallel edges in a small part of the image. Using OpenCV's Laplacian edge detection, I extract an edge map of the image. I use this map to detect both defocused blur and motion blur.

The advantage of the Laplacian is its sensitivity: it detects not only the main edges but also the small edges inside the object. This lets me distinguish sharp regions belonging to the object from blurred background regions.
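The sketch below makes the edge-map step concrete. It is a minimal pure-Python example that applies a 3x3 Laplacian to a small grayscale image stored as a list of rows. It is only an illustration of the idea. The report relies on OpenCV's Laplacian operator, while the kernel choice, the border handling (replicating edge pixels), and the helper name `laplacian_edge_map` are assumptions made for this example.

```python
def laplacian_edge_map(gray):
    """Return |Laplacian| of a grayscale image given as a list of rows.

    Uses the 4-neighbour kernel [[0, 1, 0], [1, -4, 1], [0, 1, 0]] and
    replicates border pixels (an assumption; OpenCV's default border differs).
    """
    h, w = len(gray), len(gray[0])

    def px(y, x):
        # Clamp coordinates so that border pixels are replicated.
        y = min(max(y, 0), h - 1)
        x = min(max(x, 0), w - 1)
        return gray[y][x]

    edges = []
    for y in range(h):
        row = []
        for x in range(w):
            lap = (px(y - 1, x) + px(y + 1, x) + px(y, x - 1) + px(y, x + 1)
                   - 4 * px(y, x))
            row.append(abs(lap))
        edges.append(row)
    return edges


# The same dark-to-bright transition, once as a hard step, once spread out.
sharp = [[0, 0, 0, 255, 255, 255] for _ in range(3)]
blurred = [[0, 0, 85, 170, 255, 255] for _ in range(3)]
print(max(max(r) for r in laplacian_edge_map(sharp)))    # 255
print(max(max(r) for r in laplacian_edge_map(blurred)))  # 85
```

The softer transition produces a much weaker response. This is the property that the defocus detector builds on.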

- Defocused detector:

  - The edge map alone is not enough to detect defocused blur. To distinguish sharp edge pixels from blurred pixels, I apply a log filter (parameter: ordre_log_filtrer).

  - Then, I smooth the image with OpenCV's blur and medianBlur operators to get a blurred map.

  - Finally, I decide whether each pixel is blurred or sharp by using a threshold (parameter: filtrer_defocus).
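The three defocus steps above can be sketched in pure Python on a small edge map. The report does not spell out the exact form of the log filter or how ordre_log_filtrer enters it, so this sketch assumes a pointwise `log(1 + x)` transform. A 3x3 mean filter and a 3x3 median filter stand in for OpenCV's blur and medianBlur. The names `defocus_mask` and `_window` are hypothetical, and the `threshold` argument plays the role of filtrer_defocus.

```python
import math
import statistics


def _window(img, y, x, r):
    """Values of the (2r+1) x (2r+1) window centred on (y, x); borders replicated."""
    h, w = len(img), len(img[0])
    return [img[min(max(j, 0), h - 1)][min(max(i, 0), w - 1)]
            for j in range(y - r, y + r + 1)
            for i in range(x - r, x + r + 1)]


def defocus_mask(edge_map, threshold, radius=1):
    """Return a mask where True marks a pixel judged blurred (defocused)."""
    h, w = len(edge_map), len(edge_map[0])
    size = (2 * radius + 1) ** 2
    # Step 1 (assumed form of the log filter): compress responses with log(1 + x).
    logged = [[math.log1p(v) for v in row] for row in edge_map]
    # Step 2: mean (box) blur followed by a median blur gives the "blurred map".
    boxed = [[sum(_window(logged, y, x, radius)) / size for x in range(w)]
             for y in range(h)]
    smoothed = [[statistics.median(_window(boxed, y, x, radius)) for x in range(w)]
                for y in range(h)]
    # Step 3: a weak smoothed edge response means the pixel is judged blurred.
    return [[v < threshold for v in row] for row in smoothed]


# Strong edges in the two left columns, no edges elsewhere.
edge = [[255, 255, 0, 0, 0, 0] for _ in range(3)]
for row in defocus_mask(edge, threshold=2.0):
    print(row)  # [False, False, True, True, True, True]
```

The smoothing steps spread the strong responses slightly, so the pixel next to the edge region (third column) still falls below the threshold. This illustrates why the threshold and the filter parameters interact.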

- Motion blur detector:

  - First, I keep only the clear edges of the edge map by applying a threshold (parameter: edges_filtrer).

  - Then, the image is divided into small parts of a pre-defined size (parameter: part_size_motion_blur).

  - Then, I apply the [Hough Line Transform](https://docs.opencv.org/3.4/d9/db0/tutorial_hough_lines.html) to find every line in each part. This transformation uses the parameters theta_resolution and threshold_hough to find lines in an image. Instead of the standard Hough transform (cv::HoughLines()), I use the Probabilistic Hough Line Transform (cv::HoughLinesP()). HoughLinesP uses more criteria than HoughLines to determine a line, including min_line_length, which rejects lines that are too short.

  - Once I have the lines of a part, it is easy to calculate their angles. As mentioned in the proposal, a small part with many parallel lines is a motion-blurred part. The parameter min_nb_lines sets how many lines a part needs before it can be considered motion blurred. Then, I check whether these lines are parallel. There are some tricks to do it:

    - First, angles alpha and alpha ± pi are the same in terms of parallelism. Hence, I limit the angle to the range from 0 to pi radians.

    - Secondly, 2 parallel lines do not necessarily have exactly the same angle, but very close values. Hence, I calculate the standard deviation of the angle values and compare it to the parameter max_stddev. If it is smaller, the part is motion blurred.

    - Finally, this trick has a weakness when the direction of motion is horizontal. In that case the angles are around 0 and pi, so the variance is very large even though the image is motion blurred. My solution is to bound the angle interval from 0 to (pi - pi/20). This solves the case, but it is not the best solution.
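The per-part parallelism test can be sketched as follows. The sketch takes the line segments of one part in the (x1, y1, x2, y2) form that cv::HoughLinesP returns, so the Hough step itself is not reproduced. The names `tiles`, `segment_angle`, and `is_motion_blurred_part` are hypothetical. The handling of near-horizontal lines is only one reading of the bound described above: angles at or above pi - pi/20 are shifted down by pi so that they sit next to the angles near 0.

```python
import math
import statistics


def tiles(height, width, part_size):
    """Yield (top, left, bottom, right) bounds of the parts covering the image."""
    for top in range(0, height, part_size):
        for left in range(0, width, part_size):
            yield top, left, min(top + part_size, height), min(left + part_size, width)


def segment_angle(x1, y1, x2, y2):
    """Angle of a segment folded into [0, pi): alpha and alpha + pi are parallel."""
    alpha = math.atan2(y2 - y1, x2 - x1) % math.pi
    # Assumed reading of the horizontal-motion fix: angles close to pi are
    # moved next to 0 so that near-horizontal lines form a single cluster.
    if alpha >= math.pi - math.pi / 20:
        alpha -= math.pi
    return alpha


def is_motion_blurred_part(segments, min_nb_lines, max_stddev):
    """True if the part has enough lines and their angles are nearly equal."""
    if len(segments) < min_nb_lines:
        return False
    angles = [segment_angle(*s) for s in segments]
    return statistics.pstdev(angles) < max_stddev


# Three almost horizontal segments, two of them tilted in opposite directions.
horizontal = [(0, 0, 10, 0), (0, 5, 10, 4), (10, 8, 0, 7)]
print(is_motion_blurred_part(horizontal, min_nb_lines=3, max_stddev=0.1))  # True
```

Without the shift, the second segment would get an angle close to pi, and the standard deviation would jump well above max_stddev. This is the failure case the report describes for horizontal motion.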

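I tested the algorithm with the [test images](https://invent.kde.org/graphics/digikam/-/tree/IQS-blurdetection/core/tests/imgqsort/data) from digiKam's unit tests. These images cover different use cases for blur detection. For instance, the algorithm recognizes sharp images like [rock](https://invent.kde.org/graphics/digikam/-/blob/IQS-blurdetection/core/tests/imgqsort/data/blur_rock_1.jpg), and even difficult cases like [tree](https://invent.kde.org/graphics/digikam/-/blob/IQS-blurdetection/core/tests/imgqsort/data/blur_tree_1.jpg). It also recognizes motion blur in images like [rock_2](https://invent.kde.org/graphics/digikam/-/blob/IQS-blurdetection/core/tests/imgqsort/data/blur_rock_2.jpg) and [tree_2](https://invent.kde.org/graphics/digikam/-/blob/IQS-blurdetection/core/tests/imgqsort/data/blur_tree_2.jpg). However, the detection is not sensitive enough to judge them as rejected; they are only judged pending.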

However, there are still some hard situations. The street image is easily misjudged as motion blurred because of its nature. The sky image is recognized as defocused blur because of its sky and cloud areas.

The project should also cover artistic images, which focus on a specific position and blur the rest on purpose. This requires a focus region extractor based on metadata. Moreover, as mentioned above, the algorithm fails when the image contains a background (sky, wall, water, ...). This is understandable, because a background has no edges to detect, yet it cannot be treated as a blurred object either. I will try to recognize such parts next week.

Focuspoint extraction and completion of blur detection (second week : June 14 - June 21)

A camera can use some points on the image, called auto focus (AF) points, to make the surrounding region sharper, while farther regions are left blurred to give an artistic result. Depending on the camera model, an AF point can be pre-defined by the camera or chosen by the user. An AF point has only 2 possible states: in-focus and selected. In-focus points are the points brought into focus by the camera, while selected points are the points the user expects to be sharp.

A point can be in both states (infocus-selected) or in neither (inactive).

Inspired by the [Focus Points](https://github.com/musselwhizzle/Focus-Points) plugin for Lightroom, I implemented the class FocusPointExractor in digiKam's metadata engine library. Using ExifTool, which is already deployed in digiKam 7.2, I can easily extract the auto focus information (AF points). However, there is no standard format for this information, so each camera brand needs its own AF point extraction function.

Currently, AF points can be extracted for 4 camera brands:

- Nikon
- Canon
- Sony
- Panasonic

Each point is not just a point on the image but a rectangle, described by 4 properties: the 2 coordinates of its center, its width, and its height. The type of the point is the fifth property.
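A focus point with these five properties, together with the in-focus and selected states described earlier, could be modelled as shown below. This is a hypothetical Python sketch, not digiKam's actual class. The names `AFStatus`, `FocusPoint`, and `is_selected` are assumptions. A flag enum fits the states well, because "both" and "neither" fall out of combining the two basic flags.

```python
from dataclasses import dataclass
from enum import Flag


class AFStatus(Flag):
    """State of an AF point: in-focus, selected, both, or neither (inactive)."""
    INACTIVE = 0
    INFOCUS = 1
    SELECTED = 2


@dataclass
class FocusPoint:
    """Hypothetical AF point: centre, size, and type (the fifth property)."""
    x: float         # x coordinate of the rectangle centre
    y: float         # y coordinate of the rectangle centre
    width: float
    height: float
    status: AFStatus

    def is_selected(self) -> bool:
        return AFStatus.SELECTED in self.status


points = [
    FocusPoint(100, 80, 20, 20, AFStatus.INFOCUS | AFStatus.SELECTED),
    FocusPoint(300, 80, 20, 20, AFStatus.INFOCUS),
    FocusPoint(200, 150, 20, 20, AFStatus.INACTIVE),
]
selected = [p for p in points if p.is_selected()]
print(len(selected))  # 1
```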

FocusPoint is used to recognize the focus region of the image, that is, the region the user expects to be sharp. Therefore, I extract only selected points. For each point, I define the focus region as the region around the point's rectangle, proportional to its size. Only pixels within this region are considered for blur detection.
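The focus region around a selected point can be sketched as a rectangle enlarged about the point's centre and clipped to the image. The report only says the region is proportional to the point's rectangle. The enlargement factor `scale` and the helper names `focus_region` and `in_region` are therefore hypothetical. Pixels outside the returned bounds would be ignored by blur detection.

```python
def focus_region(cx, cy, width, height, img_w, img_h, scale=2.0):
    """Return (left, top, right, bottom) of a region proportional to an AF rectangle.

    The AF rectangle (centre cx, cy; size width x height) is enlarged by the
    hypothetical factor `scale` about its centre and clipped to the image.
    """
    half_w = width * scale / 2
    half_h = height * scale / 2
    left = max(0, int(cx - half_w))
    top = max(0, int(cy - half_h))
    right = min(img_w, int(cx + half_w))
    bottom = min(img_h, int(cy + half_h))
    return left, top, right, bottom


def in_region(x, y, region):
    """True if pixel (x, y) lies inside the half-open region bounds."""
    left, top, right, bottom = region
    return left <= x < right and top <= y < bottom


region = focus_region(100, 80, 20, 20, img_w=640, img_h=480)
print(region)                      # (80, 60, 120, 100)
print(in_region(90, 70, region))   # True
print(in_region(200, 70, region))  # False
```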

As mentioned last week, the algorithm should not consider background regions, even in images without focus points. By observation, these regions are often of a single color. Therefore, I detect them by dividing the image into small parts and calculating the standard deviation of color in each part. If it is smaller than a threshold (parameter: mono_color_threshold), I judge the part to be background and no longer consider it. With this adjustment, the figure below shows the latest version of my algorithm.

<img id="blur_detection_overall idea" src="/images/blur_detection_algo_2.png" alt="blur_detection_algo_2">
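The background test described above can be sketched for a grayscale image. Each part whose pixel standard deviation falls below mono_color_threshold is marked as background and skipped. The function name `background_parts` is hypothetical. Using grayscale values instead of full color is also an assumption, made to keep the example short.

```python
import statistics


def background_parts(gray, part_size, mono_color_threshold):
    """Return the (top, left) corners of parts judged to be uniform background."""
    h, w = len(gray), len(gray[0])
    background = []
    for top in range(0, h, part_size):
        for left in range(0, w, part_size):
            values = [gray[y][x]
                      for y in range(top, min(top + part_size, h))
                      for x in range(left, min(left + part_size, w))]
            # A nearly uniform part (e.g. clear sky or a plain wall) has a low spread.
            if statistics.pstdev(values) < mono_color_threshold:
                background.append((top, left))
    return background


# Left part: flat "sky"; right part: textured content.
image = [[200, 201, 10, 250],
         [201, 200, 240, 20]]
print(background_parts(image, part_size=2, mono_color_threshold=5))  # [(0, 0)]
```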

The main problem now is fine-tuning the parameters. If I set ordre_log_filtrer and filtrer_defocus too high, sharp images can be judged pending. However, if I set them too low, blurred images can be judged pending.