GSoC/2019/StatusReports/ThanhTrungDinh

| (25 intermediate revisions by the same user not shown) | |||

| Line 10: | Line 10: | ||

** detect faces across various scales (e.g. big, small, etc.), with occlusion (e.g. sunglasses, scarf, mask etc.), with different orientations (e.g. up, down, left, right, side-face etc.) | ** detect faces across various scales (e.g. big, small, etc.), with occlusion (e.g. sunglasses, scarf, mask etc.), with different orientations (e.g. up, down, left, right, side-face etc.) | ||

'''Mentors''' : Maik Qualmann, Gilles Caulier, Stefan Müller, Marc Palaus | |||

<br> | |||

[[File:intro_facerec2.gif]] | |||

<br> | |||

== Important Links == | == Important Links == | ||

=== Proposal === | === Proposal === | ||

[https://drive.google.com/open?id=1PeWZIeR3JcgrN5QqbpkYSH8bcZsblMRJ Project Proposal] | [https://drive.google.com/open?id=1PeWZIeR3JcgrN5QqbpkYSH8bcZsblMRJ Project Proposal] | ||

| Line 35: | Line 38: | ||

** [https://invent.kde.org/kde/digikam/commit/0be666f51d9342259b4f7fdbbf04302f94180dce Clustering with k-means] | ** [https://invent.kde.org/kde/digikam/commit/0be666f51d9342259b4f7fdbbf04302f94180dce Clustering with k-means] | ||

** [https://invent.kde.org/kde/digikam/commit/a2cc416487d1e1ece3724c820a025c3b21b4b0f7 Clustering with DBSCAN] | ** [https://invent.kde.org/kde/digikam/commit/a2cc416487d1e1ece3724c820a025c3b21b4b0f7 Clustering with DBSCAN] | ||

<br> | |||

== Contacts == | |||

'''Email''': [email protected] | |||

'''Github''': TrungDinhT | |||

'''LinkedIn''': https://www.linkedin.com/in/thanhtrungdinh/ | |||

<br> | |||

== Work report == | == Work report == | ||

| Line 47: | Line 60: | ||

* Start to implement chosen NN model with OpenCV DNN | * Start to implement chosen NN model with OpenCV DNN | ||

<br> | |||

=== Coding period : Phase one (May 28 to June 23) === | === Coding period : Phase one (May 28 to June 23) === | ||

For this phase, my work mostly concentrated on building the "first and dirty" but working prototype of face recognition with OpenCV DNN. In addition, throughout that very first draft, points that need to be improved were revealed, so as to build a better and faster face recognition module. | For this phase, my work mostly concentrated on building the "first and dirty" but working prototype of face recognition with OpenCV DNN. In addition, throughout that very first draft, points that need to be improved were revealed, so as to build a better and faster face recognition module. | ||

| Line 72: | Line 86: | ||

Indeed, talking about the potential of the implementation with OpenCV DNN, I was able to identify the points whose solution is going to improve: | Indeed, talking about the potential of the implementation with OpenCV DNN, I was able to identify the points whose solution is going to improve: | ||

* | * Accuracy: | ||

** It was on prediction phase when euclidean distance between 128-D vectors was used as a metric to evaluate whether a face is "similar" to another face or not. However, other types of distance (e.g. cosine similarity) more suitable for unnormalized vectors may return better results. | ** It was on prediction phase when euclidean distance between 128-D vectors was used as a metric to evaluate whether a face is "similar" to another face or not. However, other types of distance (e.g. cosine similarity) more suitable for unnormalized vectors may return better results. | ||

** More suitable face alignment is promising for better recognition accuracy. OpenFace github has [https://github.com/cmusatyalab/openface/blob/master/openface/align_dlib.py python script] on how faces should be aligned for the best accuracy achieved by output of the neural network | ** More suitable face alignment is promising for better recognition accuracy. OpenFace github has [https://github.com/cmusatyalab/openface/blob/master/openface/align_dlib.py python script] on how faces should be aligned for the best accuracy achieved by output of the neural network | ||

* | * Speed: | ||

** file containing the data to compute face landmarks (useful for aligning face before recognition) is loaded every time a face is recognized. This can be easily eliminated by loading and storing the data on memory. In addition, detection is conducted twice on face, one by OpenCV Haar Cascade face detection and other internally by Dlib. All of them are unnecessary and take a lot of time to finish. | ** file containing the data to compute face landmarks (useful for aligning face before recognition) is loaded every time a face is recognized. This can be easily eliminated by loading and storing the data on memory. In addition, detection is conducted twice on face, one by OpenCV Haar Cascade face detection and other internally by Dlib. All of them are unnecessary and take a lot of time to finish. | ||

* | * Modularity: | ||

** different NN models require different kinds of preprocessing for input images (e.g. appropriate face alignment in case of OpenFace). Therefore, an abstraction needs to be implemented to allow possible future use of other NN models. | ** different NN models require different kinds of preprocessing for input images (e.g. appropriate face alignment in case of OpenFace). Therefore, an abstraction needs to be implemented to allow possible future use of other NN models. | ||

<br> | <br> | ||

| Line 90: | Line 104: | ||

<br> | <br> | ||

'''TODO''' | '''TODO''' | ||

* Abstraction layer for other NN models to be implemented | * Abstraction layer for other NN models to be implemented. | ||

* Implement new face detection | * Implement new face detection. | ||

* Optimize face prediction and investigate face clustering | * Optimize face prediction and investigate face clustering. | ||

<br> | <br> | ||

Following the analysis result reported for the last 2 weeks, I modified the implementation in a way that all data files needed for recognition are loaded at the launching time (in | Following the analysis result reported for the last 2 weeks, I modified the implementation in a way that all data files needed for recognition are loaded at the launching time (in constructors), which saves a lot of time. It shows an increase of 10x in speed, since it turns out that loading data takes 90% of processing time. | ||

| Line 101: | Line 115: | ||

Since new implementation of face recognition has achieved some | Since new implementation of face recognition has achieved some promising results, I intend to concentrate on optimization for the next coding phases: | ||

* Codes should be factored and | * Codes should be factored and restructured so as to allow possible implementations of different NN models with OpenCV DNN, which will require different preprocessing. | ||

* Face detection with SSD-MobileNet should be implemented into facesengine and tested | * Face detection with SSD-MobileNet should be implemented into facesengine and tested. | ||

* Face prediction should be optimized. Indeed, the current way to predict a tag for a new face is based on finding the closest face to that faces, and then the tag of that closest face will be assigned to the new face. Intuitively, it's not a good solution. As a result, a way to determine whether a face belongs to a group of faces should be investigated, alongside with face clustering. | * Face prediction should be optimized. Indeed, the current way to predict a tag for a new face is based on finding the closest face to that faces, and then the tag of that closest face will be assigned to the new face. Intuitively, it's not a good solution. As a result, a way to determine whether a face belongs to a group of faces should be investigated, alongside with face clustering. | ||

<br> | |||

=== Coding period : Phase two (June 24 to July 21) === | === Coding period : Phase two (June 24 to July 21) === | ||

| Line 113: | Line 129: | ||

<br> | <br> | ||

'''DONE''' | '''DONE''' | ||

* Restructuring face recognition codes and isolating codes for preprocessing input of OpenFace NN model | * Restructuring face recognition codes and isolating codes for preprocessing input of OpenFace NN model. | ||

'''TODO''' | '''TODO''' | ||

* Implement new face detection with OpenCV DNN and SSD-MobileNet model | * Implement new face detection with OpenCV DNN and SSD-MobileNet model. | ||

<br> | <br> | ||

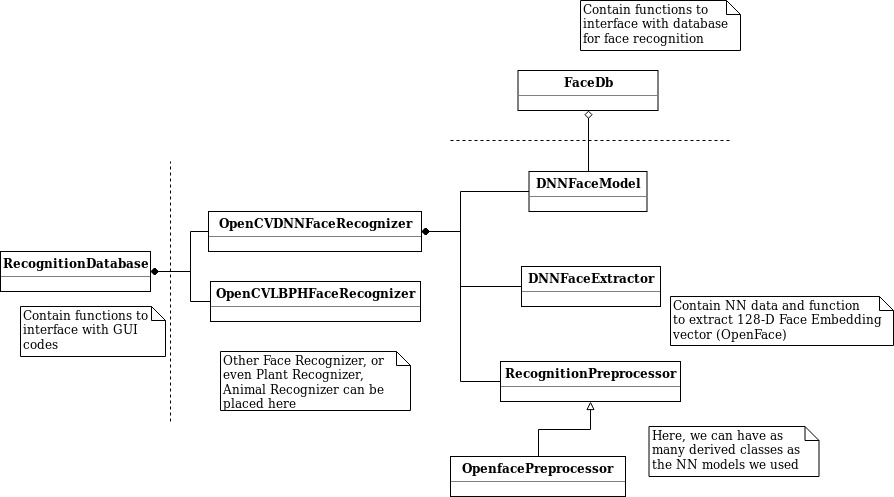

Simplified UML for face recognition code factoring | Simplified UML for face recognition code factoring | ||

[[File: | [[File:UML_face_recognition_module.jpg]] | ||

<br> | <br> | ||

<br> | <br> | ||

| Line 129: | Line 145: | ||

Discussing with my mentors and others digiKam contributors about face recognition, we were all agree that face detection is one of the key | Discussing with my mentors and others digiKam contributors about face recognition, we were all agree that face detection is one of the key factors to improve face recognition in dk. Hence the next step of my work during this phase should tackle face detection. | ||

<br> | <br> | ||

| Line 136: | Line 152: | ||

<br> | <br> | ||

'''DONE''' | '''DONE''' | ||

* New face detection implementation with OpenCV DNN and | * New face detection implementation with OpenCV DNN and SSD-MobileNet pretrained model. | ||

'''TODO''' | '''TODO''' | ||

* Improve face detection for not square (or not near-square) images | * Improve face detection for not square (or not near-square) images. | ||

* Improve face recognition with distance measure to face groups and implement face clustering | * Improve face recognition with distance measure to face groups and implement face clustering. | ||

<br> | <br> | ||

At the moment of | At the moment of publishing, [https://arxiv.org/abs/1512.02325 SSD] (Single Shot Multibox Detector) is the state-of-the-art (SOTA) algorithm for single shot face detection, while achieved comparable results with [https://arxiv.org/abs/1504.08083 Fast R-CNN] (current SOTA algorithm) and a real-time performance at over 30 fps on CPU. SSD-MobileNet for OpenCV DNN is a pretrained model based on SSD and [https://arxiv.org/abs/1704.04861 MobileNet] architecture and can be found in the corresponding [https://github.com/opencv/opencv/tree/master/samples/dnn/face_detector github folder] of OpenCV. The customized pretrained model is lightweight and specially fits for OpenCV DNN. | ||

| Line 156: | Line 172: | ||

Besides, for the last phase of GSoC, I will | Besides, for the last phase of GSoC, I will concentrate my work on face recognition improvement and face clustering. | ||

<br> | |||

=== Coding period: Phase three (July 22 to August 26) === | |||

For this last phase of GSoC, I dedicated my work on optimizing the face detection and face recognition, while finishing my last TODOs on face clustering. | |||

<br> | |||

==== July 22 to August 11 (Week 9-11) - Face detection improvements, YOLOv3, k-means clustering ==== | |||

<br> | |||

'''DONE''' | |||

* Face detection improvements on non-square images. | |||

* Study and implement new face detection based on OpenCV DNN with YOLOv3 pretrained model. | |||

* Implement k-means clustering for faces. | |||

'''TODO''' | |||

* Try other algorithms for face clustering. | |||

* Turn back to face recognition optimization. | |||

<br> | |||

Basing on my observation (i.e. face detection did not work well on rectangle image with w:h ratio much smaller or much bigger than 1.0), I found a way to improve significantly the accuracy of face detection. The idea is to resize the image while keeping its aspect ratio (in a way such that the resizing proportion is as least as possible), then pad the image so that it becomes square and reaches the required dimension. Since faces in the image are only bigger but not deformed, they are easily detected. | |||

In addition, I also discovered that SSD-MobileNet-based face detection did not work well with photos having many faces, low-resolution photos, or landscape photos with people that are too small in comparison to other objects (e.g. river, mountain, trees, etc.). All of those cases indicate that SSD-MobileNet cannot detect faces when the proportion of face_size/photo_size is too small. Hence, I studied another NN model, which is [https://pjreddie.com/media/files/papers/YOLOv3.pdf YOLOv3]. When implementing face detection with that model, I achieved outperforming result comparing with SSD-MobileNet. | |||

The image below shows a comparison of face detection with SSD-MobileNet and with YOLOv3 | |||

[[File:face_detection_comparison.jpg|center]] | |||

Even though it runs 10 times slower (i.e. 400 - 800ms for each image), it detects faces much more accurately. From my point of view, bounding boxes detected for faces (by face detection) take a significant amount of time if users want to modify them. Therefore, an accurate face detection algorithm should be preferred over a fast one. That's why I set YOLOv3 as default NN model to use for face detection over SSD-MobileNet. | |||

Back to face recognition, I attempted to implement face clustering. The ideas is to cluster unknown faces into groups, helping user to tag faces more easily. However, k-means implementation requires the number of clusters in advance, which is absurd in our case as we needs to cluster first to know how many clusters there are. As a result, better clustering algorithms should be studied later, as well as optimization on face recognition. | |||

==== August 12 to August 18 (Week 12) - Face recognition optimization and DBSCAN face clustering ==== | |||

<br> | |||

'''DONE''' | |||

* Face clustering with DBSCAN implemented. | |||

* Distance measurement between a face and a group of faces implemented. | |||

'''TODO''' | |||

* Face recognition UI clean up. | |||

* Documentation and final report | |||

<br> | |||

Face recognition optimization on distance measurement between a face and a group of faces has been abandoned for more than a month. Focusing on that for this week, I finally implemented the distance measurement as the average of distance to all the faces in the group. It is expected that face prediction will be now more robust. | |||

[https://www.aaai.org/Papers/KDD/1996/KDD96-037.pdf Density-Based Spatial Clustering of Applications with Noise] (DBSCAN) was implemented for face clustering. It was selected since it does not require the number of clusters like k-means. However, an eps value (determining how we can consider 2 faces as being similar) and minPts (for minimum faces per cluster) should be given as parameter of the algorithm. The algorithm works quite well, but extremely sensitive to eps value. Indeed, human faces are quite similar one to another, so the "noise" is strong, and DBSCAN is not quite good in that situation. | |||

For the week of work submission, things left to do are cleaning the codes and face recognition UI to replace old face recognition based on LPBH algorithm, documenting the codes and finishing the final report. | |||

<br> | |||

=== Future work === | |||

There are still many things to improve on digiKam face recognition performance and the accuracy of face clustering, as well as on the code base side. From my point of view, those improvements should be: | |||

* The 128-D face embedding vector of each face should be computed only once, and when modified. This will save user a lot time waiting for the vector to be recomputed each time face recognition performed. | |||

* New face clustering algorithms implemented. A candidate can be [https://ieeexplore.ieee.org/document/8359265 Density-Based Multiscale Analysis for Clustering in Strong Noise Settings With Varying Densities] (DBMAC/DBMAC-II). | |||

* Dead codes and unused codes of old face recognition algorithms (e.g. LPBH, FisherFace, EigenFace) should be cleaned up. | |||

Latest revision as of 07:46, 4 September 2019

digiKam AI Face Recognition with OpenCV DNN module

digiKam is a KDE desktop application for photo management. For a long time, the digiKam team has put a lot of effort into developing the face engine, a feature that scans user photos and automatically suggests face tags based on faces previously tagged by users. However, that functionality is currently deactivated in digiKam, as it is slow and not accurate enough. This project therefore aims to improve the performance and accuracy of face recognition in digiKam by exploiting state-of-the-art neural network models from AI and machine learning, combined with the highly optimized OpenCV DNN module.

The project includes 2 main parts:

- Improve face recognition: implementation with OpenCV DNN module

  - Reduce processing time while keeping high accuracy.

  - Classify unknown faces into classes of similar faces.

- Improve face detection: implementation to be investigated

  - Detect faces across various scales (e.g. big, small, etc.), with occlusion (e.g. sunglasses, scarf, mask, etc.), and with different orientations (e.g. up, down, left, right, side face, etc.).

Mentors: Maik Qualmann, Gilles Caulier, Stefan Müller, Marc Palaus

Figure: intro_facerec2.gif

Important Links

Proposal

Project Proposal: https://drive.google.com/open?id=1PeWZIeR3JcgrN5QqbpkYSH8bcZsblMRJ

Git dev branch

Important commits

- Face Recognition:

- Face Detection:

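- Face workflow:

  - Clustering with k-means: https://invent.kde.org/kde/digikam/commit/0be666f51d9342259b4f7fdbbf04302f94180dce

  - Clustering with DBSCAN: https://invent.kde.org/kde/digikam/commit/a2cc416487d1e1ece3724c820a025c3b21b4b0f7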

Contacts

Email: [email protected]

Github: TrungDinhT

LinkedIn: https://www.linkedin.com/in/thanhtrungdinh/

Work report

Bonding period (May 6 to May 27)

First, I familiarized myself with the current Deep Learning (DL) based approach to face recognition in digiKam and picked up the work of Yingjie Liu (digiKam GSoC 2017). His proposal, blog posts and status report led me to the FaceNet paper and to OpenFace, an open-source implementation of a neural network (NN) model inspired by the FaceNet paper. Both looked very promising for my work. In addition, Liu implemented unit tests for his DL implementation and reported their results (benchmark and accuracy), which can serve as a reference for my work later.

For the rest of the bonding period, I focused on carefully reading the FaceNet paper and on looking for other promising NN models to implement when the coding period begins. I also prepared unit tests for face recognition, based on the current test programs and Liu's work.

My plan for the first 2 weeks of the coding period is to:

- Finish NN model selection

- Finish unit tests

- Start to implement chosen NN model with OpenCV DNN

Coding period: Phase one (May 28 to June 23)

During this phase, my work mostly concentrated on building a "quick and dirty" but working prototype of face recognition with OpenCV DNN. This very first draft also revealed the points that need improvement in order to build a better and faster face recognition module.

May 28 to June 11 (Week 1 - 2) - Face Recognition got an 8x speed up

DONE

- NN model selection -> OpenFace pretrained model.

- Working first-draft implementation of face recognition with OpenCV DNN and the OpenFace pretrained model.

- Unit tests for evaluating and benchmarking the new implementation (time and accuracy).

- Tested and compared the new implementation with Liu's Dlib-based work, for performance and accuracy.

TODO

- Improve performance (i.e. speed) and accuracy of new implementation.

- Investigate the effect of better face detection on face recognition accuracy.

The OpenFace model was chosen because it suits an application like digiKam. OpenFace is a pretrained model based on the FaceNet paper: it takes a cropped, aligned face as input and outputs a 128-D vector representing that face. The vector can later be used to compute similarity to pretagged faces, or be clustered into groups of similar faces. In photo management software such as digiKam, the user has an "open" face database to which an unlimited number of faces and people can be added. Face recognition should therefore be flexible enough to extend, while having decent accuracy. False positives, however, are not critical, since users can always correct them. For all of these reasons, OpenFace is a good choice.

Liu's implementation is based on a version of the OpenFace pretrained model customized for compatibility with Dlib. It achieves remarkable accuracy on the orl database: above 98% for tag prediction when only 20% of the images are pretagged. Its drawback, however, is performance: face recognition on 112x92 images took 8 s per face on average, and larger images take even longer.

My new implementation attempt with OpenCV DNN has shown great potential. Although it does not reach the accuracy of Liu's work, it runs much faster: in my first draft, the processing time is only about 1.3 s per image, while accuracy is above 80% with 20% of the images pretagged.
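Accuracy figures of the form "X% for 20% of pretagged images" come from an evaluation of roughly this shape: for each person, a fraction of their faces is pretagged, and the remaining faces must be predicted. Below is a minimal sketch of such a protocol. It assumes a generic `predict(known, embedding)` callback and embeddings stored as plain tuples; the function names are hypothetical, and this is not the actual digiKam unit-test code.

```python
import random
from collections import defaultdict


def split_per_person(samples, pretag_ratio=0.2, seed=0):
    """samples: list of (person_id, embedding). Returns (known, queries)."""
    by_person = defaultdict(list)
    for person, emb in samples:
        by_person[person].append(emb)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    known, queries = [], []
    for person, embs in by_person.items():
        embs = embs[:]
        rng.shuffle(embs)
        # Pretag at least one face per person; the rest must be predicted.
        n_known = max(1, round(len(embs) * pretag_ratio))
        known += [(person, e) for e in embs[:n_known]]
        queries += [(person, e) for e in embs[n_known:]]
    return known, queries


def accuracy(known, queries, predict):
    """Fraction of query faces whose predicted tag matches the true person."""
    if not queries:
        return 0.0
    hits = sum(1 for person, emb in queries if predict(known, emb) == person)
    return hits / len(queries)
```

With the orl database (10 images per person), a ratio of 0.2 pretags 2 faces per person and leaves 8 to predict.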

Regarding the potential of the OpenCV DNN implementation, I identified the following points for improvement:

- Accuracy:

  - In the prediction phase, the Euclidean distance between 128-D vectors was used as the metric to decide whether one face is "similar" to another. However, other distances (e.g. cosine similarity) that are better suited to unnormalized vectors may give better results.

  - More suitable face alignment promises better recognition accuracy. The OpenFace GitHub repository has a Python script (https://github.com/cmusatyalab/openface/blob/master/openface/align_dlib.py) showing how faces should be aligned so that the neural network's output achieves the best accuracy.

- Speed:

  - The file containing the data used to compute face landmarks (needed to align the face before recognition) is loaded every time a face is recognized. This can easily be eliminated by loading the data once and keeping it in memory. In addition, detection runs twice on each face: once by the OpenCV Haar Cascade face detector and once internally by Dlib. Both redundancies are unnecessary and take a lot of time.

- Modularity:

  - Different NN models require different kinds of preprocessing for input images (e.g. appropriate face alignment in the case of OpenFace). Therefore, an abstraction needs to be implemented so that other NN models can be used in the future.
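The alignment idea in the accuracy item can be sketched as estimating the affine transform that maps three detected landmarks (for example the two outer eye corners and the nose tip) onto fixed template positions inside the network's square input. This is a pure-Python sketch under stated assumptions: the template coordinates are hypothetical placeholders, not the values used by OpenFace. A real implementation would then warp the image with OpenCV, e.g. `cv2.getAffineTransform` followed by `cv2.warpAffine`.

```python
def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a, b):
    """Solve the 3x3 linear system a @ x = b with Cramer's rule."""
    d = _det3(a)
    if abs(d) < 1e-12:
        raise ValueError("landmarks are collinear; no unique affine transform")
    x = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]  # replace one column by the right-hand side
        x.append(_det3(m) / d)
    return x


def affine_from_landmarks(src, dst):
    """2x3 matrix [[a, b, tx], [c, d, ty]] mapping 3 src points onto 3 dst points."""
    a = [[x, y, 1.0] for x, y in src]
    return [_solve3(a, [p[0] for p in dst]), _solve3(a, [p[1] for p in dst])]


def apply_affine(m, point):
    """Apply a 2x3 affine matrix to an (x, y) point."""
    x, y = point
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


# Hypothetical template: where the outer eye corners and the nose tip
# should land in a 96x96 network input (placeholder values, not OpenFace's).
TEMPLATE_96 = [(20.0, 30.0), (76.0, 30.0), (48.0, 60.0)]
```

Three point pairs determine an affine transform exactly, which is why the sketch solves two 3x3 systems (one per output coordinate) instead of running a least-squares fit.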

June 11 to June 23 (Week 3 - 4) - 90% accuracy and 100x speed up on face recognition

DONE

- Processing time reduced to 80 ms per face, which is 10x faster than the previous draft and 100x faster than the current implementation.

- Accuracy improved to 90% for 20% pretagged faces.

- Tests (in Python) show that better face detection improves accuracy up to 96% for 20% pretagged faces.

(All tests were conducted on orl database)

TODO

- Abstraction layer for other NN models to be implemented.

- Implement new face detection.

- Optimize face prediction and investigate face clustering.

Following the analysis reported for the previous 2 weeks, I modified the implementation so that all data files needed for recognition are loaded once at launch time (in constructors), which saves a lot of time. This yields a 10x speed-up, since it turned out that loading data took 90% of the processing time.
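The change boils down to moving expensive loading out of the per-face path and into construction. A minimal sketch of the pattern, where `FaceRecognizer` and the injected `loader` are hypothetical stand-ins for digiKam's classes and for reading the model or landmark data files:

```python
class FaceRecognizer:
    """Loads heavy data once at construction and reuses it for every face."""

    def __init__(self, model_path, loader):
        # The expensive step (e.g. parsing a model or landmark file) runs exactly once.
        self._model = loader(model_path)

    def recognize(self, face):
        # Per-face work only touches the in-memory model; no file I/O here.
        return self._model(face)
```

If loading accounts for 90% of the per-face time, doing it once leaves only the remaining 10% per face, which matches the 10x figure above.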

To improve accuracy, cosine similarity was implemented. As these 128-D vectors are not normalized, cosine distance works better than Euclidean distance. Besides, I wrote Python scripts to test a new face detection approach with the SSD-MobileNet NN model and OpenCV DNN. They showed a possible accuracy above 96% for 20% pretagged images, which is extremely promising.
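The difference between the two metrics shows up clearly on toy vectors: Euclidean distance is sensitive to vector length, while cosine distance (1 minus cosine similarity) only compares direction. A minimal pure-Python sketch, for illustration:

```python
import math


def euclidean_distance(u, v):
    """Straight-line distance; grows with differences in vector length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def cosine_distance(u, v):
    """1 - cosine similarity; ignores vector length and compares direction only."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0.0:
        raise ValueError("a zero vector has no direction")
    return 1.0 - dot / norm
```

Here `[1, 0]` is farther from `[3, 0]` than from `[0, 1]` in Euclidean terms, although `[1, 0]` and `[3, 0]` point in exactly the same direction (cosine distance 0).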

Since the new face recognition implementation has achieved promising results, I intend to concentrate on optimization in the next coding phases:

- The code should be refactored and restructured to allow implementations of different NN models with OpenCV DNN, which will require different preprocessing.

- Face detection with SSD-MobileNet should be implemented in facesengine and tested.

- Face prediction should be optimized. Currently, a tag is predicted for a new face by finding the closest known face and assigning that face's tag to the new one. Intuitively, this is not a good solution. Therefore, a way to determine whether a face belongs to a group of faces should be investigated, along with face clustering.
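For reference, the current prediction rule in the last item is a 1-nearest-neighbour search over tagged embeddings. A minimal sketch, assuming embeddings are float lists and using cosine distance (the distance choice and function names are illustrative):

```python
import math


def cosine_distance(u, v):
    """1 - cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0.0:
        raise ValueError("a zero vector has no direction")
    return 1.0 - dot / norm


def predict_nearest(tagged, embedding):
    """tagged: list of (tag, embedding). Returns (tag, distance) of the closest face."""
    best_tag, best_dist = None, float("inf")
    for tag, known in tagged:
        d = cosine_distance(embedding, known)
        if d < best_dist:
            best_tag, best_dist = tag, d
    return best_tag, best_dist
```

Its weakness is that a single atypical or mistagged face can decide the result on its own, which is why measuring the distance to a whole group of faces is worth investigating.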

Coding period: Phase two (June 24 to July 21)

Based on the results and analysis of the previous phase, I mostly concentrated on refactoring the face recognition code during this phase. This produced an abstraction layer under which more NN models can be implemented while sharing a common interface, giving flexibility and room for extension in later face recognition development. In addition, I completed a working version of face detection with OpenCV DNN and SSD-MobileNet, which outperforms the current face detection.

June 24 to July 07 (Week 5 - 6) - Face recognition code refactoring for modularity

DONE

- Restructured the face recognition code and isolated the input preprocessing of the OpenFace NN model.

TODO

- Implement new face detection with OpenCV DNN and SSD-MobileNet model.

Figure: Simplified UML for face recognition code factoring (UML_face_recognition_module.jpg)

The motivation for restructuring the face recognition code stems from the fact that different NN models need different preprocessing techniques. Therefore, the code that loads an NN model together with its own preprocessing should be isolated. Restructuring face recognition during this phase will thus significantly ease my work if I want to test different NN models later.
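One way to express this isolation is an abstract interface in which each model supplies its own preprocessing while sharing a common embedding pipeline. The sketch below is illustrative only: the class names are hypothetical (they are not taken from the digiKam UML), and `ToyModel` uses placeholder preprocessing and a placeholder "network" instead of real OpenCV DNN calls.

```python
from abc import ABC, abstractmethod


class FaceEmbeddingModel(ABC):
    """Common interface: each NN model brings its own preprocessing."""

    @abstractmethod
    def preprocess(self, face_image):
        """Model-specific preparation (alignment, resizing, normalization...)."""

    @abstractmethod
    def forward(self, model_input):
        """Run the network and return the face embedding."""

    def embed(self, face_image):
        # Shared pipeline: callers never need to know which model is in use.
        return self.forward(self.preprocess(face_image))


class ToyModel(FaceEmbeddingModel):
    """Hypothetical stand-in for an OpenFace-like model, for illustration only."""

    def __init__(self, input_size):
        self.input_size = input_size

    def preprocess(self, face_image):
        # Placeholder "resize": truncate or zero-pad a flat pixel list.
        pixels = list(face_image)[: self.input_size]
        return pixels + [0.0] * (self.input_size - len(pixels))

    def forward(self, model_input):
        # Placeholder "network": scale 8-bit pixels to [0, 1].
        return [p / 255.0 for p in model_input]
```

Adding another model then means writing one new subclass with its own `preprocess` and `forward`, without touching code that only calls `embed`.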

In addition, I deleted Liu's old Dlib-based code, since after the restructuring no code uses Dlib anymore. This is indeed one goal of my GSoC project this year, because it reduces maintenance effort and eliminates the Dlib dependency, its compiler warnings, and the complicated compiler rules needed to build Dlib.

In discussions about face recognition, my mentors, other digiKam contributors and I all agreed that face detection is one of the key factors for improving face recognition in digiKam. Hence, the next step of my work during this phase should tackle face detection.

July 08 to July 21 (Week 7 - 8) - Face Detection achieves 100% accuracy on orl test set

DONE

- New face detection implementation with OpenCV DNN and SSD-MobileNet pretrained model.

TODO

- Improve face detection for non-square (or not near-square) images.

- Improve face recognition with distance measure to face groups and implement face clustering.

At the time of its publication, SSD (Single Shot Multibox Detector, https://arxiv.org/abs/1512.02325) was the state-of-the-art (SOTA) algorithm for single-shot object detection, achieving results comparable to Fast R-CNN (https://arxiv.org/abs/1504.08083), the SOTA algorithm at that time, with real-time performance at over 30 fps on CPU. SSD-MobileNet for OpenCV DNN is a pretrained model based on the SSD and MobileNet (https://arxiv.org/abs/1704.04861) architectures, and it can be found in the corresponding GitHub folder of OpenCV (https://github.com/opencv/opencv/tree/master/samples/dnn/face_detector). This customized pretrained model is lightweight and specifically tailored to OpenCV DNN.
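With OpenCV DNN, an SSD detector's output is a blob of shape 1x1xNx7, where each of the N rows holds `[image_id, class_id, confidence, x1, y1, x2, y2]` with box corners normalized to [0, 1]. The pure-Python sketch below turns those rows into pixel boxes. The 0.5 confidence threshold is an assumed value, not necessarily the one used in digiKam. With OpenCV's Python bindings, the rows would come from something like `net.forward()[0, 0]`.

```python
def ssd_detections_to_boxes(detections, width, height, conf_threshold=0.5):
    """detections: rows [image_id, class_id, confidence, x1, y1, x2, y2]
    with normalized corners. Returns pixel boxes as (x, y, w, h, confidence)."""
    boxes = []
    for _, _, conf, x1, y1, x2, y2 in detections:
        if conf < conf_threshold:
            continue  # discard low-confidence candidates
        # Clamp corners to the image, then scale normalized values to pixels.
        left = int(max(0.0, x1) * width)
        top = int(max(0.0, y1) * height)
        right = int(min(1.0, x2) * width)
        bottom = int(min(1.0, y2) * height)
        if right > left and bottom > top:
            boxes.append((left, top, right - left, bottom - top, conf))
    return boxes
```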

The new face detection with OpenCV DNN and SSD-MobileNet gave very good results. Although it runs a little slower than the OpenCV Haar face detector, it maintains real-time detection at around 30 fps on CPU. On the orl test set, the OpenCV DNN face detector outperforms OpenCV Haar, detecting 100% of faces compared with around 90% for OpenCV Haar. It can also detect faces in photos with complicated lighting, shadows, or non-frontal poses. The generated bounding boxes are also better, since they come in various shapes and fit the detected faces exactly. A comparison of OpenCV DNN with OpenCV Haar can be found below:

Credit: Vikas Gupta (Face Detection – OpenCV, Dlib and Deep Learning ( C++ / Python ))

Despite its very good performance and accuracy, the OpenCV DNN face detector does not seem to work well with rectangular photos whose width/height ratio is far from 1.0 (i.e. far from square): faces are either not detected or detected in the wrong places. Therefore, the OpenCV DNN implementation must be studied further.

For the last phase of GSoC, I will concentrate my work on improving face recognition and on face clustering.

Coding period: Phase three (July 22 to August 26)

For this last phase of GSoC, I dedicated my work to optimizing face detection and face recognition, while finishing my remaining TODOs on face clustering.

July 22 to August 11 (Week 9-11) - Face detection improvements, YOLOv3, k-means clustering

DONE

- Face detection improvements on non-square images.

- Study and implement new face detection based on OpenCV DNN with YOLOv3 pretrained model.

- Implement k-means clustering for faces.

TODO

- Try other algorithms for face clustering.

- Turn back to face recognition optimization.

Based on my observation that face detection did not work well on rectangular images with a w:h ratio much smaller or much larger than 1.0, I found a way to significantly improve face detection accuracy. The idea is to resize the image while keeping its aspect ratio (using the smaller of the width and height scaling factors, so that the whole image fits into the network input), then pad it so that it becomes square at the required dimension. Since faces in the image are only scaled, not deformed, they are easily detected.
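The resize-then-pad step can be sketched as the computation below. It assumes a 300x300 network input (the size of the commonly used OpenCV SSD face model) and centered padding; both are illustrative choices. Boxes detected in the padded square must then be mapped back to the coordinates of the original image.

```python
def letterbox_params(width, height, target=300):
    """Scale an image to fit a target x target square without distortion, then pad.

    Returns (scale, new_w, new_h, pad_left, pad_top)."""
    # The smaller factor guarantees that both sides fit inside the square.
    scale = min(target / width, target / height)
    new_w, new_h = round(width * scale), round(height * scale)
    pad_left = (target - new_w) // 2  # centered padding (illustrative choice)
    pad_top = (target - new_h) // 2
    return scale, new_w, new_h, pad_left, pad_top


def box_to_original(box, scale, pad_left, pad_top):
    """Map a box (x, y, w, h) found in the padded square back to the original image."""
    x, y, w, h = box
    return ((x - pad_left) / scale, (y - pad_top) / scale, w / scale, h / scale)
```

For a 1200x600 photo, the image is scaled by 0.25 to 300x150 and padded with 75 rows above and below, so the face proportions are preserved.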

In addition, I discovered that SSD-MobileNet-based face detection did not work well on photos with many faces, on low-resolution photos, or on landscape photos where people are very small compared with other objects (e.g. rivers, mountains, trees). All of these cases indicate that SSD-MobileNet cannot detect faces when the face_size/photo_size ratio is too small. Hence, I studied another NN model, YOLOv3 (https://pjreddie.com/media/files/papers/YOLOv3.pdf). Face detection implemented with that model outperformed SSD-MobileNet.

Figure: comparison of face detection with SSD-MobileNet and with YOLOv3 (face_detection_comparison.jpg).

Even though YOLOv3 runs about 10 times slower (400 - 800 ms per image), it detects faces much more accurately. In my view, correcting face bounding boxes by hand takes users a significant amount of time, so an accurate face detection algorithm should be preferred over a fast one. That is why I set YOLOv3 instead of SSD-MobileNet as the default NN model for face detection.
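Like SSD, YOLOv3 typically produces several overlapping candidate boxes for the same face. Detections are therefore usually filtered by a confidence threshold and by non-maximum suppression (OpenCV offers `cv2.dnn.NMSBoxes` for this). Below is a pure-Python sketch of greedy NMS based on intersection over union; the thresholds are illustrative assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x, y, w, h)."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, score_threshold=0.5, iou_threshold=0.4):
    """Greedy non-maximum suppression; returns indices of the kept boxes."""
    # Visit confident candidates from the highest score down.
    order = sorted((i for i, s in enumerate(scores) if s >= score_threshold),
                   key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with an already kept one.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```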

Back on face recognition, I attempted to implement face clustering. The idea is to cluster unknown faces into groups, helping users tag faces more easily. However, k-means requires the number of clusters in advance, which is impractical in our case, since we would need to cluster first to know how many clusters there are. As a result, better clustering algorithms should be studied later, along with face recognition optimization.
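The limitation is visible in the algorithm's signature: `k` must be supplied before clustering starts. Below is a minimal pure-Python k-means (Lloyd's algorithm) sketch over embedding lists. It uses Euclidean distance and naively initializes the centroids from the first `k` points for reproducibility, an illustrative choice rather than digiKam's implementation.

```python
import math


def kmeans(points, k, iterations=100):
    """Lloyd's algorithm. points: list of equal-length float lists. Returns one label per point."""
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    centroids = [list(p) for p in points[:k]]  # naive deterministic initialization
    labels = None
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        if new_labels == labels:
            break  # converged: assignments no longer change
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(coords) / len(members) for coords in zip(*members)]
    return labels
```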

August 12 to August 18 (Week 12) - Face recognition optimization and DBSCAN face clustering

DONE

- Face clustering with DBSCAN implemented.

- Distance measurement between a face and a group of faces implemented.

TODO

- Face recognition UI clean up.

- Documentation and final report

Work on measuring the distance between a face and a group of faces had been on hold for more than a month. Focusing on it this week, I finally implemented this distance as the average distance to all faces in the group. Face prediction is expected to be more robust now.
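The face-to-group distance described above (the average distance to all faces in the group) can be sketched as follows. The function names are hypothetical, and Euclidean distance (`math.dist`) is only a default here: any metric, such as cosine distance, can be passed in.

```python
import math


def average_distance(embedding, group, distance):
    """Mean distance from one face to every face in a non-empty group."""
    return sum(distance(embedding, member) for member in group) / len(group)


def predict_by_group(groups, embedding, distance=math.dist):
    """groups: dict tag -> list of embeddings. Returns the tag with the smallest average distance."""
    return min(groups, key=lambda tag: average_distance(embedding, groups[tag], distance))
```

Averaging over the whole group means that no single face, such as one mistagged photo, decides the prediction on its own.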

Density-Based Spatial Clustering of Applications with Noise (DBSCAN, https://www.aaai.org/Papers/KDD/1996/KDD96-037.pdf) was implemented for face clustering. It was selected because, unlike k-means, it does not require the number of clusters. However, an eps value (the distance within which two faces are considered similar) and minPts (the minimum number of faces per cluster) must be given as parameters of the algorithm. The algorithm works quite well but is extremely sensitive to the eps value. Indeed, human faces are quite similar to one another, so the "noise" is strong, and DBSCAN does not handle that situation well.
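A compact pure-Python DBSCAN sketch showing the role of `eps` and `min_pts`. It illustrates the algorithm and is not the digiKam code. In standard DBSCAN, a point's neighbourhood includes the point itself, and `min_pts` is the neighbourhood size a point needs in order to be a core point.

```python
import math

NOISE = -1


def dbscan(points, eps, min_pts, distance=math.dist):
    """Returns a cluster label per point; NOISE (-1) marks outliers."""
    labels = [None] * len(points)

    def neighbours(i):
        # Includes i itself, since distance(p, p) == 0 <= eps.
        return [j for j in range(len(points)) if distance(points[i], points[j]) <= eps]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may later become a border point of some cluster
            continue
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins the cluster, not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbours = neighbours(j)
            if len(j_neighbours) >= min_pts:
                queue.extend(j_neighbours)  # j is a core point: keep expanding
        cluster += 1
    return labels
```

Shrinking `eps` from 1.5 to 0.5 on a small set of points spaced 1 unit apart turns every point into noise, which illustrates how sensitive the result is to that parameter.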

For the week of work submission, the remaining tasks are cleaning up the code and the face recognition UI so that the new face recognition replaces the old one based on the LBPH algorithm, documenting the code, and finishing the final report.

Future work

There is still much to improve in digiKam's face recognition performance and face clustering accuracy, as well as in the code base. In my view, these improvements should be:

- The 128-D embedding vector of each face should be computed only once, and recomputed only when the face is modified. This will save users a lot of time now spent waiting for vectors to be recomputed every time face recognition runs.

- New face clustering algorithms should be implemented. One candidate is Density-Based Multiscale Analysis for Clustering in Strong Noise Settings With Varying Densities (DBMAC/DBMAC-II, https://ieeexplore.ieee.org/document/8359265).

- Dead and unused code of the old face recognition algorithms (e.g. LBPH, FisherFace, EigenFace) should be cleaned up.
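The first item could be implemented as a cache that stores each face's embedding together with a fingerprint of the face data, recomputing only when the fingerprint changes. This is a sketch under assumptions: `EmbeddingCache` is hypothetical, the fingerprint is a SHA-1 of the face crop bytes, and a real implementation would probably persist the vectors in digiKam's face database rather than in memory.

```python
import hashlib


class EmbeddingCache:
    """Compute each face's embedding once; recompute only if the face data changed."""

    def __init__(self, compute_embedding):
        self._compute = compute_embedding
        self._store = {}  # face_id -> (fingerprint, embedding)

    @staticmethod
    def _fingerprint(face_bytes):
        return hashlib.sha1(face_bytes).hexdigest()

    def get(self, face_id, face_bytes):
        fp = self._fingerprint(face_bytes)
        cached = self._store.get(face_id)
        if cached is not None and cached[0] == fp:
            return cached[1]  # unchanged face: reuse the stored vector
        embedding = self._compute(face_bytes)  # new or modified face
        self._store[face_id] = (fp, embedding)
        return embedding
```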