GSoC/2020/StatusReports/NghiaDuong: Difference between revisions


==== Project Proposal ====

[https://summerofcode.withgoogle.com/serve/5988872632139776/ Digikam DNN based Faces Recognition Improvements]

==== GitLab development branch ====

[https://invent.kde.org/graphics/digikam/-/tree/gsoc20-facesengine-recognition gsoc20-facesengine-recognition]

== Contacts ==

'''Email''': [email protected]

'''Github''': [https://github.com/MinhNghiaD MinhNghiaD]

'''LinkedIn''': https://www.linkedin.com/in/nghia-duong-2b5bbb15a/

<br>

== Project Goals ==

The current goals of this project are to:

* Improve the accuracy of the face classifier

* Optimize the memory usage of the faces engine

* Decrease the storage space used by the faces engine

* Improve processing speed

* Restructure the faces engine architecture

* Port the faces engine to the plugin architecture

== Project Report ==

=== Community Bonding period (May 1 to May 31) ===

During this period, my main objective was to familiarize myself with [[GSoC/2019/StatusReports/ThanhTrungDinh|the work of Thanh Trung Dinh]] in order to evaluate the current implementation. After going through Thanh Trung Dinh's code and final report, I have a better understanding of the current implementation of digiKam's faces engine. Generally, the architecture of the DNN faces engine can be divided into 3 main parts:

* '''Face detector''' is in charge of face detection. This module gives users the option to choose between 2 prominent face detection algorithms: YOLOv3 and SSD-MobileNet. The detected faces are cropped and then passed to the Face recognizer.

* '''Face recognizer''' is in charge of the face recognition process. It receives the cropped face from the Face detector, applies face alignment, and then passes the preprocessed face image through the neural network. After GSoC 2019, the CNN model used by digiKam is OpenFacev1, an implementation of the [https://arxiv.org/abs/1503.03832 FaceNet paper].

* '''Face database''' is in charge of the database operations that store the functional data of digiKam's faces engine. It is the link between the faces engine and the digiKam application.

According to the bug reports on digiKam's faces engine, the implementation of this module still has some problems that need to be addressed. The main problem, reported in several bugs, is that the performance of the faces engine decreases as the data set grows. Therefore, for the rest of this period, I aimed to re-evaluate the exact state of the different components of the faces engine. Because the 3 parts of the DNN version of the faces engine are fully integrated into digiKam, it is difficult to evaluate the performance of each part without introducing additional biases. In order to benchmark each component of the faces engine correctly, I created replicas of digiKam's Face detector and Face recognizer as stand-alone modules that apply the previously implemented DNN algorithms, without any link to the digiKam database or the digiKam core library. After that, I wrote the first drafts of 3 unit tests for the Face detector and the Face recognizer.

My plan for the first 2 weeks of the coding period is to:

* Complete the unit tests for the Face detector and the Face recognizer.

* Search for the problems that cause the decrease in performance.

* Try out different methods for face classification.

* Compare the performance of these different techniques.

<br>

=== Coding period : Phase one (June 1 to June 29) ===

In this phase, my work mostly concentrated on building the unit tests and applying different classification methods to the Face recognizer. These tests revealed the points that need to be improved in order to build a better and faster face recognition module.

<br>

===== June 1 to June 14 (Week 1 - 2) - Report of current state of digiKam's faces engine =====

'''DONE'''

* Unit test with GUI for the Face detector (YOLOv3 and SSD-MobileNet).

* Comparison of the performance of the YOLOv3 and SSD-MobileNet implementations in digiKam's faces engine.

* Unit test with GUI for the Face recognizer (OpenFacev1).

* Automatic unit test on large datasets to evaluate the performance of the Face recognizer.

* Evaluation of the current recognition methods used by the faces engine.

'''TODO'''

* Add and test new recognition methods in the Face recognizer in order to improve accuracy and processing speed.

* Compare the performance of these different methods.

* Debug detection errors of SSD-MobileNet.

* Speed up YOLOv3 processing time by distributing the computation.

During these first weeks of GSoC 2020, I finalized my unit tests for 2 essential components of the faces engine: the Face detector and the Face recognizer. The purpose of these tests is to understand the inner workings of the faces engine and the reasons for the degradation over time described in bug reports.

* To verify the functionality of the Face detector, I built a test with a GUI that shows the image matrices after each step of the face detection process. In this way, we can get a sense of what it is doing and then evaluate its performance.

* To verify the functionality of the Face recognizer, I built 2 tests. The first is a test with a GUI that reproduces the face suggestion process. The second receives a dataset as an argument, splits it into a training set and a test set, and then passes them to the Face recognizer in order to evaluate its performance.

====== '''- Face Detector status''' ======

Currently, digiKam's faces engine employs 2 different CNN algorithms for face detection: YOLOv3 and SSD-MobileNet. The implementations of these 2 algorithms in digiKam differ in performance. For each image, YOLOv3 scans 10600 bounding boxes and therefore gives very high accuracy, but it takes about 400 ms per image on average. On the other hand, SSD-MobileNet scans only 20 boxes in about 20 ms per image and gives lower accuracy. The default method used by the faces engine is SSD-MobileNet, because it is lightweight and fast.

{|

|-

| [[File:Yolo detection.png|thumb|500px|'''Face detection by YOLOv3''']]

||[[File:Ssd detection.png|thumb|500px|'''Face detection by SSD-MobileNet''']]

|}

Although the implementation of SSD-MobileNet performs rather well in average use cases, where all faces are clear and can be easily detected, it still has some limitations that need to be addressed. In the example above, I performed face detection using YOLOv3 and SSD-MobileNet on the same image. In the figure on the left-hand side, the Face detector powered by YOLOv3 detects most of the faces in the image. However, in the figure on the right-hand side, the Face detector powered by SSD-MobileNet does not detect any face. Unfortunately, this problem with SSD-MobileNet occurs regularly, usually when the faces are small or the images are too dark. The cause of this low accuracy could be an error in the implementation of SSD-MobileNet in digiKam or an error in the neural network files. Either way, this problem needs to be corrected in order to improve the performance of the Face detector.

However, the main focus of this project is to improve the Face recognizer of digiKam. Therefore, work on the Face detector will be postponed to the end of the project.

====== '''- Face Recognizer status''' ======

After being detected by the Face detector, the face regions of the images are cropped and passed to the Face recognizer. Here, the face image passes through several steps to be recognized. First, the face image is converted into a '''cv::Mat''' and then scaled to a fixed ratio defined by the Face recognizer. After that, the face is aligned based on the positions of the eyes, nose, and lips, before being passed through the neural network to output a 128-dimensional vector called a face embedding. Finally, the output face embedding is compared with the registered faces to predict the corresponding identity.

The current classification method used by digiKam is based on the cosine distance between face embeddings. The greater the cosine of the angle between 2 vectors, the more similar the 2 faces are. In order to predict the label of a face, digiKam's Face recognizer calculates the mean cosine distance between the face and the pre-registered face embeddings of each label. The Face recognizer then picks, as its prediction, the label with the highest mean, provided that this mean is greater than a certain threshold.
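The prediction rule described above can be sketched as follows. This is a minimal illustration in Python rather than digiKam's C++ code: the function names and the toy 3-dimensional embeddings are assumptions made for the example, and what the report calls cosine distance is computed here as the cosine of the angle between two vectors.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def predict_mean_cosine(embedding, registered, threshold=0.7):
    """Return the label with the highest mean cosine, or None.

    registered maps each label to the list of embeddings stored for it
    (hypothetical layout). A label is only accepted if its mean cosine
    is greater than the threshold.
    """
    best_label, best_score = None, threshold
    for label, vectors in registered.items():
        mean = sum(cosine_similarity(embedding, v) for v in vectors) / len(vectors)
        if mean > best_score:
            best_label, best_score = label, mean
    return best_label


# Toy data: two identities with two registered embeddings each.
registered = {
    "alice": [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]],
    "bob": [[0.0, 1.0, 0.0], [0.1, 0.9, 0.0]],
}
```

Every prediction visits every registered embedding, which is why the cost of this rule grows with the amount of registered data.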

To examine the result of each step, I implemented a unit test with a GUI as an extension of the unit test for the Face detector. This test displays the transformations of face images through the recognition process, and it includes a simple control panel that lets testers run a simple recognition workflow. This test gives an intuition of what the recognizer is doing and therefore makes debugging easier.

In addition to this test, I implemented a performance test for the Face recognizer. This performance test receives a face dataset and a train/test ratio as inputs. The test splits the dataset according to the split ratio: the training set is registered with its labels by the Face recognizer, and the test set is used to verify the facial recognition process. The split is completely random to ensure the integrity of the test. With the help of this test, I can evaluate the actual performance (accuracy and speed) of digiKam's Face recognizer.
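A performance test of this kind can be sketched as below. This is an illustrative Python outline, not the actual digiKam test: the names split_dataset and evaluate, the register/predict callbacks, and the choice to time only the prediction step are assumptions, and the test set is assumed to be non-empty.

```python
import random
import time


def split_dataset(samples, train_ratio, seed=None):
    """Shuffle (embedding, label) pairs and split them into train and test sets."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]


def evaluate(register, predict, samples, train_ratio, seed=None):
    """Return (accuracy in %, mean prediction time in ms/face).

    register(embedding, label) stores one training sample and
    predict(embedding) returns a label; both are supplied by the caller.
    """
    train, test = split_dataset(samples, train_ratio, seed)
    for embedding, label in train:
        register(embedding, label)

    correct = 0
    start = time.perf_counter()
    for embedding, label in test:
        if predict(embedding) == label:
            correct += 1
    elapsed_ms = (time.perf_counter() - start) * 1000.0

    return 100.0 * correct / len(test), elapsed_ms / len(test)
```

Passing a fixed seed makes a run reproducible, while leaving it unset gives a fresh random split each time.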

At first, I ran the performance test on the [http://vision.ucsd.edu/content/yale-face-database Yalefaces dataset], which contains 166 pre-labeled face images. On this small dataset, the mean cosine distance method performs rather well: '''88.8889 % accuracy at a speed of 75.3333 ms/face, with threshold 0.7, on a total of 121 training faces and 45 test faces'''. However, when I ran the same test on the [http://vision.ucsd.edu/~leekc/ExtYaleDatabase/ExtYaleB.html Extended Yale B dataset], which contains 16380 pre-labeled face images, the accuracy dropped to 0 %. To be specific, the result of the mean cosine distance method is '''0 % accuracy at a speed of 626.485 ms/face, with threshold 0.7, on a total of 11469 training faces and 4911 test faces'''. The main reason for this poor accuracy is that the method fails to recognize faces because the mean cosine distance is too small. This is the same problem that appeared in several bug reports.

This degradation of the Face recognizer is due to the poor adaptability of the mean cosine distance method to a large dataset. Because of the nature of this method, as the data related to an entity grows, the dispersion of the data makes the mean distance smaller. The calculation of the mean cosine distance is also exhaustive: its time complexity increases linearly with the size of the data. Therefore, the more data it gets, the poorer its performance becomes. Furthermore, due to the mathematical nature of the cosine function, the partitioning capacity of this method is limited. In general, data classification is about finding a way to partition data into different groups. Because cos(x) : [0°, 180°] --> [-1,1] is injective, a threshold on the cosine corresponds to a maximum angle: all vectors within a cone of about 30° are placed in the same group, even with a high threshold of 0.86. Therefore, the more labels we have, the more collisions occur between these data partitions.
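As a quick check of the angle quoted above, the largest angle accepted by a given cosine threshold is the arccosine of that threshold. The helper name below is hypothetical.

```python
import math


def max_angle_degrees(threshold):
    """Largest angle (in degrees) whose cosine is still >= threshold."""
    return math.degrees(math.acos(threshold))


# Angle accepted by the 0.86 threshold mentioned in the text,
# and by the 0.7 threshold used in the performance tests.
angle_high = max_angle_degrees(0.86)
angle_default = max_angle_degrees(0.7)
```

For a threshold of 0.86 this gives an angle of just under 31°, and the 0.7 threshold used in the tests accepts an even wider cone.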

===== June 15 to June 29 (Week 3 - 4) - 84% accuracy and 104.804 ms/face speed on the Extended Yale B dataset =====

'''DONE'''

* Apply machine learning classifiers in the Face recognizer.

* Accuracy improvement from 0% to 84% on the Extended Yale B dataset.

* Processing speed improvement from 671.449 ms/face to 104.804 ms/face on the Extended Yale B dataset.

* High dimensional data partitioning with KD-Tree.

* Implementation of online learning in the Face recognizer.

* First sketch of the database model for the Face recognizer.

'''TODO'''

* Fully integrate the new improvements into the faces engine.

* Reorganize the databases of the faces engine.

* Re-verify the face alignment process to avoid outlier face embeddings.

* Apply map-reduce to distribute the calculations over multiple threads.

* Port the faces engine to the plug-in architecture.

* Test the UMAP dimensionality reduction algorithm to gain insight into the global structure of the face embeddings.

As stated in the previous section, the mean cosine distance is not a good fit for a face classifier. Therefore, during these 2 weeks, I focused on implementing new classification methods and comparing their performance.

======'''- New face classifiers'''======

OpenFace trained its convolutional neural network by optimizing the triplet loss on the Euclidean distances between face embeddings. This optimization ensures that face embeddings belonging to the same person are close to each other, while those of 2 different persons are far apart. Because of this property, I tried several classification methods that can distinguish vector representations. Here is the list of classification methods that I tried during this period:

* Closest Cosine distance,

* Closest Euclidean distance,

* Support vector machine with linear kernel,

* Machine Learning K-Nearest neighbors,

* Traditional K-Nearest neighbors with KD-Tree.

{|

|-

|

Cosine distance is the method used by Thanh Trung Dinh in the last Google Summer of Code, and Euclidean distance is the method used by OpenFace and the FaceNet paper. Both rely on the same intuition: comparing face embeddings based on their relative distances to one another. Based on the principle of triplet loss optimization, the closest face embedding is the most probable match for the label. These 2 methods search all the registered data to find the closest match, and therefore their time and space complexities are linear in the size of the data.

The next 2 classifiers are classical machine learning classifiers used for vector classification and, in this case, face embedding classification. Data classification can be solved efficiently by supervised machine learning, and it is simple to implement with the help of the machine learning module provided by '''OpenCV'''. The registered face embeddings and their labels are used to optimize the classifier function. Therefore, the more data we have, the more robust the classifier becomes. Furthermore, because the classification problem is solved by a classifier function, the processing time is nearly constant. Therefore, these machine learning classifiers are highly scalable.

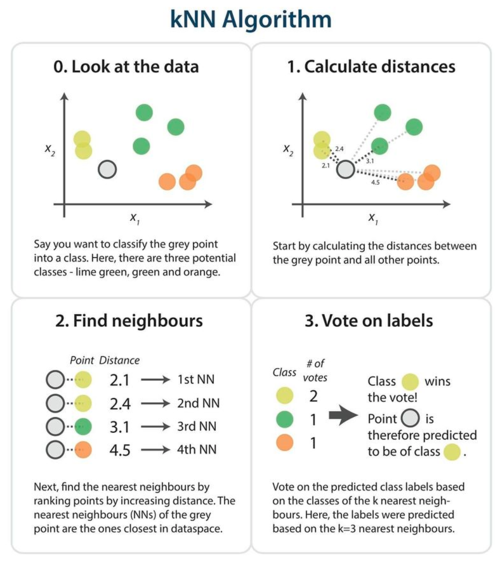

The support vector machine method optimizes its classifier function to distinguish the vectors based on their Euclidean distance. This method performs well on data that is well separated, but in the case of digiKam, the data have many outliers that cause trouble. To deal with outliers during the classification process, the K-Nearest neighbors method is a feasible solution. The principle of K-Nearest neighbors is to apply a voting system among the K nearest data points: the label with the most votes is the most likely prediction. Thanks to the voting mechanism, outliers do not have much influence on the prediction result.

|| [[File:Knearest.png|500px|thumb|center|'''K-Nearest neighbors illustration''']]

|}

After several tests, the machine learning K-Nearest neighbors method appears to perform better than the other methods. However, this method is based on statistics and therefore can have some biases. Therefore, I wanted to try out the traditional K-Nearest neighbors with a vote counting mechanism. With the help of a KD-Tree, a binary search tree that can partition high dimensional vectors, the search for the K nearest nodes to a given position becomes more efficient, with a time complexity of '''O(log(n))'''.
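The combination of a KD-Tree search and a majority vote can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the digiKam implementation: the class and function names are hypothetical, the tree is built by simple insertion without balancing, and 2-dimensional points stand in for 128-dimensional face embeddings.

```python
import heapq
import itertools
from collections import Counter


class Node:
    def __init__(self, point, label, axis):
        self.point, self.label, self.axis = point, label, axis
        self.left = None
        self.right = None


def insert(root, point, label, depth=0):
    """Insert a labeled point; the split axis cycles with the depth."""
    if root is None:
        return Node(point, label, depth % len(point))
    if point[root.axis] < root.point[root.axis]:
        root.left = insert(root.left, point, label, depth + 1)
    else:
        root.right = insert(root.right, point, label, depth + 1)
    return root


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def k_nearest(root, target, k):
    """Return the k closest (squared distance, label) pairs, nearest first."""
    heap = []                  # max-heap emulated with negated distances
    order = itertools.count()  # tie-breaker so labels are never compared

    def visit(node):
        if node is None:
            return
        d = sq_dist(node.point, target)
        if len(heap) < k:
            heapq.heappush(heap, (-d, next(order), node.label))
        elif d < -heap[0][0]:
            heapq.heapreplace(heap, (-d, next(order), node.label))
        diff = target[node.axis] - node.point[node.axis]
        near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
        visit(near)
        # Explore the far side only if it could still hold a closer point.
        if len(heap) < k or diff * diff < -heap[0][0]:
            visit(far)

    visit(root)
    return sorted((-neg, label) for neg, _, label in heap)


def predict(root, target, k=3):
    """Majority vote among the k nearest neighbors."""
    votes = Counter(label for _, label in k_nearest(root, target, k))
    return votes.most_common(1)[0][0]


root = None
for point, label in [((0.0, 0.2), "alice"), ((5.0, 5.0), "bob"),
                     ((0.2, 0.0), "alice"), ((5.2, 5.0), "bob"),
                     ((-0.2, 0.0), "alice"), ((5.0, 5.2), "bob"),
                     ((0.05, 0.05), "bob")]:  # last point is an outlier
    root = insert(root, point, label)
```

With this toy data, a query at (0.0, 0.0) has the "bob" outlier as its single nearest neighbor, but a vote among the 3 nearest neighbors returns "alice", which is the robustness to outliers that motivates the voting mechanism.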

======'''- Performance comparison'''======

After integrating the new face classification methods into digiKam's Face recognizer, the results of the performance tests of these methods on the Extended Yale B dataset are shown in the table below:

{| class="wikitable sortable"

|-

! !! Closest Cosine distance !! Closest Euclidean distance !! SVM !! Machine learning KNN !! KD-Tree KNN

|-

| Accuracy (%) || 83.7179 || 83.7179 || 81.6667 || 82.6923 || 84.001

|-

| Speed (ms/face)|| 550.321 || 469.642 || 79.9364 || 82.7103 || 121.379

|}

The performance comparison above shows that, among the implemented classification methods, K-Nearest neighbors gives the best trade-off between accuracy and speed: the KD-Tree variant has the highest accuracy, and both KNN variants are far faster than the closest-distance methods. As explained in the previous section, the K-Nearest neighbors algorithm has a hyperparameter '''K''' that indicates the maximum number of neighbor nodes that can participate in the voting process. In order to choose this hyperparameter and to better understand the dispersion of the face embeddings, I tested the K-Nearest neighbors method with several values of '''K'''. The results of my tests are shown in the table below:

{| class="wikitable sortable"

|-

! Parameter K !! 1 !! 3 !! 5 !! 7 !! 9 !! 11 !! 13 !! 15

|-

| Accuracy (%) || 82.3057 || 83.0052 || 82.8238 || 82.772 || 82.9275 || 82.8756 || 82.9016 || 82.487

|-

| Speed (ms/face)|| 107.353 || 107.353 || 110.823 || 112.779 || 112.498 || 116.119 || 116.421 || 126.256

|}

The results of these tests indicate that, in general, the processing time per face increases with '''K''', while the accuracy fluctuates slightly. The classifier gives the best accuracy when '''K = 3'''. However, this fluctuation indicates that the main cause of classification errors is outliers, and that there are outliers across the dataset. Normally, a good face recognition model like OpenFace should be able to avoid this problem. I suspect that the problem arises during the preprocessing step of digiKam's Face recognizer. Either way, this problem with outliers has to be looked into in detail in the next step of the project.

======'''- Data storage for face classifier'''======

An accuracy of 82 % at 112 ms/face on average is an acceptable performance for now. Therefore, I decided to proceed with the implementation of data storage for the Face recognizer. Up to this moment, the best classification methods are machine learning K-Nearest neighbors and KD-Tree K-Nearest neighbors.

From the perspective of machine memory, the machine learning method only needs to load its trained classifier to work. On the other hand, the KD-Tree method gives better accuracy, but it has to keep all face vectors in memory in order to navigate the tree. From the perspective of database storage, one of the advantages of machine learning methods is that they can perform online learning, in which training data only needs to be passed through the classifier once and, after that, only the label needs to be stored in the database. However, the machine learning module of OpenCV currently doesn't support online learning. For the KD-Tree, we have to either keep the entire tree in memory during execution or perform the K-Nearest search directly on the database. In order to perform the K-Nearest search on the database, each entry has to be stored as a tree node and the interfaces have to perform the search dynamically.

For these reasons, we can choose to re-train the machine learning models every time new data is registered, to store the KD-Tree, or to implement a spatial table for K-Nearest search on the database. For the next phase of GSoC 2020, I plan to complete and test these designs of the Face recognizer. After that, I will fully integrate the new version of the Face recognizer into the rest of the faces engine. Finally, some parallel processing has to be implemented in the faces engine to improve its speed. Furthermore, since the new version of the faces engine does not depend much on the rest of digiKam, it can be ported to a plug-in architecture.

=== Coding period : Phase two (July 1 to July 27) ===

The main goal of this phase is to finish the database implementation for the DNN face recognizer of digiKam's faces engine and to complete the integration of the DNN face recognizer into the faces engine.

===== June 30 to July 14 (Week 5 - 6) - Database storage for digiKam's faces engine =====

'''DONE'''

* Plot face embeddings after UMAP dimensionality reduction

* Implement Label database

* Implement Spatial storage for K-Nearest search directly on the database

* Implement face embedding database

'''TODO'''

* Fully integrate the new improvements into the faces engine.

* Apply parallel processing in the face recognizer

* Port the faces engine to the plug-in architecture

======'''- UMAP examination of faces embedding'''======

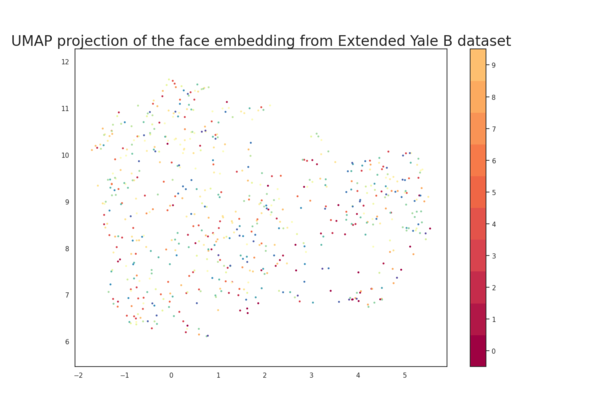

At the beginning of this second phase, I wanted to better understand the distribution of the face embeddings produced by the OpenFace model. UMAP is a great tool for this: it is an algorithm that learns the internal structure of high dimensional data in order to generate a low dimensional representation, which makes it possible to reduce high dimensional vectors to 2D for plotting. I therefore plotted the face embeddings produced by the OpenFace neural network using UMAP.

[[File:UMAP projection of faces embedding from Extended Yale B dataset.png|thumb|center|600px|'''UMAP projection of faces embedding from Extended Yale B dataset''']]

[[File:Umap error.png|thumb|center|600px|'''UMAP projection of recognition errors''']]

The first figure shows the plot of the face embeddings from the entire Extended Yale B dataset. Here we can see that the face embeddings cluster into different groups. However, there are several outliers that mix with one another. These are the faces that the faces engine fails to recognize. The second figure gives a clearer plot of these errors. Most of these errors come from images that are too dark or half-dark. Therefore, better preprocessing might help to avoid these errors.

======'''- Face embedding storage'''======

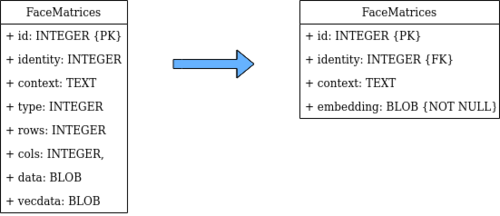

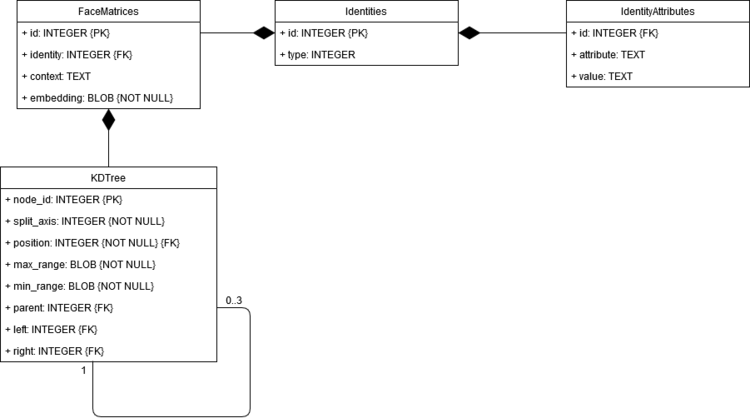

For labeling entities, digiKam already has a table named Identities. This table contains the ID of each person. These IDs are then referenced in the IdentityAttributes table, a key-value table that contains the attributes of each identity.

In previous versions of the faces engine, the face embedding generated by the recognition algorithm was stored in a table named FaceMatrices. This table contained many attributes that had become obsolete and unnecessary. Therefore, I decided to remove these unnecessary attributes in order to simplify the storage model.

[[File:Face embeding.png|500px|center|thumb|'''Changes in FaceMatrices database model''']]

As shown in the figure above, the new version of the FaceMatrices table only contains an artificial key to identify an entry, a reference to the identity of the face, the context of the registration, and the face embedding binary data itself. For now, the operations on this table are to save the face embedding extracted by the neural network, and then to use it to reconstruct the KD-Tree for KNN search or to re-train the OpenCV machine learning model when the face classifier is initialized.
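A table of this shape can be sketched with SQLite as follows. This is only an illustration in Python: the column names, the 32-bit float encoding of the embedding, and the helper functions are assumptions, since the report only names the table and describes its four fields.

```python
import sqlite3
import struct


def pack_embedding(vector):
    """Serialize a list of floats as little-endian 32-bit floats."""
    return struct.pack("<%df" % len(vector), *vector)


def unpack_embedding(blob):
    return list(struct.unpack("<%df" % (len(blob) // 4), blob))


conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE FaceMatrices (
        id        INTEGER PRIMARY KEY AUTOINCREMENT,  -- artificial key
        identity  INTEGER NOT NULL,                   -- reference to Identities
        context   TEXT,                               -- context of the registration
        embedding BLOB NOT NULL                       -- face embedding binary data
    )
""")


def save_embedding(conn, identity, context, vector):
    """Store one embedding with a single INSERT and return its row id."""
    cursor = conn.execute(
        "INSERT INTO FaceMatrices (identity, context, embedding) VALUES (?, ?, ?)",
        (identity, context, pack_embedding(vector)),
    )
    return cursor.lastrowid


def load_embeddings(conn):
    """Read every (identity, embedding) pair, e.g. to rebuild a KD-Tree."""
    rows = conn.execute("SELECT identity, embedding FROM FaceMatrices ORDER BY id")
    return [(identity, unpack_embedding(blob)) for identity, blob in rows]
```

Loading all rows once at start-up matches the usage described above, where the stored embeddings are read back to rebuild the classifier when it is initialized.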

The main advantage of this table is its simplicity, which makes its operations fast and simple. Every operation costs only one database access. However, during the lifetime of the face recognizer, all face embeddings have to be kept in machine memory in order to accelerate the recognition process.

======'''- Spatial table for K-Nearest search'''======

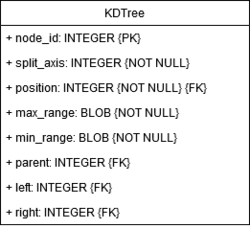

Another option for face embedding storage is to store the embeddings in a spatial manner. Because the current versions of the SQL drivers supported by Qt don't implement spatial indexing, I implemented a database model inspired by the KD-Tree structure that can perform K-Nearest search. The database model is shown below.

[[File:Untitled Diagram-Page-2.png|250px|center|thumb|'''Database model of KD-Tree storage''']]

An entry of this table is a node of a KD-Tree. By referencing other entries as sub-trees, we can implement a KD-Tree on the database. The '''INSERT''' operation performs a simple binary search to find the parent of the node being inserted in order to modify its sub-tree references. The '''K-Nearest search''' performs a binary search directly on the database in the same manner as a KD-Tree nearest search. In both operations, multiple queries are made, and the operation takes O(1) space complexity and O(N) time complexity.
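The idea of storing each KD-Tree node as a table row can be sketched as follows. This is a hedged Python and SQLite illustration, not the schema used in digiKam: the table name KDTree, its columns, the JSON encoding of the position, and the function names are all hypothetical.

```python
import heapq
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE KDTree (
        id          INTEGER PRIMARY KEY AUTOINCREMENT,
        split_axis  INTEGER NOT NULL,
        position    TEXT NOT NULL,      -- embedding, JSON-encoded for brevity
        identity    INTEGER NOT NULL,
        left_child  INTEGER REFERENCES KDTree(id),
        right_child INTEGER REFERENCES KDTree(id)
    )
""")


def fetch(conn, node_id):
    """Load one node with a single query."""
    axis, pos, identity, left, right = conn.execute(
        "SELECT split_axis, position, identity, left_child, right_child "
        "FROM KDTree WHERE id = ?", (node_id,)).fetchone()
    return axis, json.loads(pos), identity, left, right


def insert_node(conn, point, identity):
    """Descend from the root (lowest id) to find the parent, then link the new row."""
    node_id = conn.execute("SELECT MIN(id) FROM KDTree").fetchone()[0]
    parent, side, depth = None, None, 0
    while node_id is not None:
        axis, pos, _, left, right = fetch(conn, node_id)
        parent = node_id
        side = "left_child" if point[axis] < pos[axis] else "right_child"
        node_id = left if side == "left_child" else right
        depth += 1
    new_id = conn.execute(
        "INSERT INTO KDTree (split_axis, position, identity) VALUES (?, ?, ?)",
        (depth % len(point), json.dumps(point), identity)).lastrowid
    if parent is not None:
        # side is one of two fixed column names, never user input.
        conn.execute("UPDATE KDTree SET %s = ? WHERE id = ?" % side, (new_id, parent))
    return new_id


def k_nearest(conn, target, k):
    """Return the identities of the k closest stored points, nearest first."""
    best = []  # max-heap of (-squared distance, node id, identity)

    def visit(node_id):
        if node_id is None:
            return
        axis, pos, identity, left, right = fetch(conn, node_id)
        d = sum((a - b) ** 2 for a, b in zip(pos, target))
        if len(best) < k:
            heapq.heappush(best, (-d, node_id, identity))
        elif d < -best[0][0]:
            heapq.heapreplace(best, (-d, node_id, identity))
        diff = target[axis] - pos[axis]
        near, far = (left, right) if diff < 0 else (right, left)
        visit(near)
        if len(best) < k or diff * diff < -best[0][0]:
            visit(far)

    visit(conn.execute("SELECT MIN(id) FROM KDTree").fetchone()[0])
    return [identity for _, _, identity in sorted(best, reverse=True)]
```

Each visited node costs one query, so only the current candidates are held in memory, at the price of one database round trip per step of the descent.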

The main advantage of this method is that it does not consume machine memory to perform prediction. However, it takes a little more storage space for the structure and a little more query time. The current performance of this recognition method is 83% accuracy with a speed of 300 ms/face on average.

To sum up, the figure below shows the UML diagram of FaceDb for the DNN face recognizer:

[[File:Untitled Diagram-Page-1.png|750px|center|thumb|'''Database diagram of DNN faces recognizer''']]

===== July 15 to July 27 (Week 7 - 8) - Full integration of new Face recognizer to Faces engine =====

'''DONE'''

* Adapt implementation of Face recognizer to faces engine's implementation

* Reorganize Facial recognition interface of digiKam's faces engine

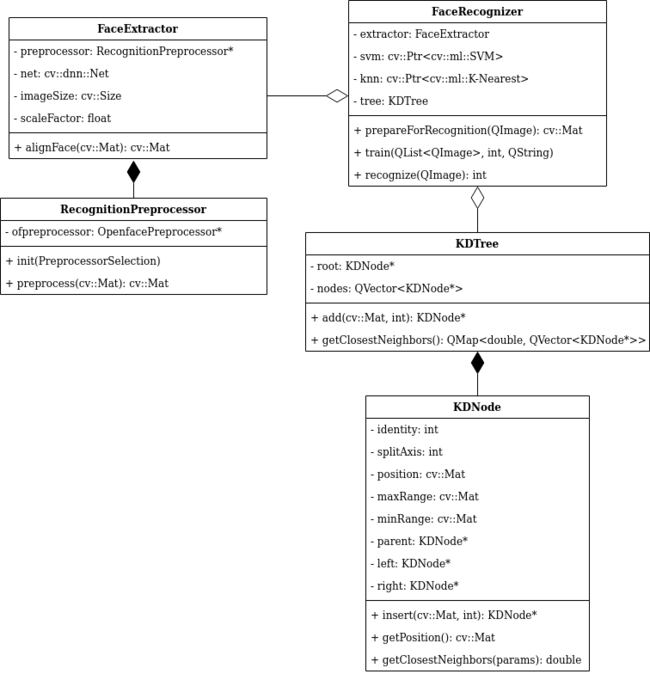

* Integrate databases for DNN face recognizer into digiKam's FaceDb

* Code documentation

'''TODO'''

* Run more tests to thoroughly verify the new implementation

* Apply parallel processing in the face recognizer

* Improve implementation of faces engine

* Improve preprocessing phase of facial recognition

======'''- Integration of new DNN face recognizer to faces engine'''======

Up to this point, the new DNN face recognizer was nearly complete. The main task of this phase is to fully integrate the new face recognizer into the rest of digiKam's faces engine. The first thing that needed to be done was to update the database diagrams of the Faces Database. For these changes to take effect, the version of the Faces Database must be updated in order to trigger the schema updater. The current version of the Faces Database is version 3; these changes in the database structure bring it to version 4. Furthermore, the database interfaces for the DNN face recognizer also needed to be rewritten.

The class hierarchy of the new DNN face recognizer is also simplified. The old hierarchy of facial recognition in the faces engine is composed of 3 main parts: the face database, the face recognizers, and the facial recognition wrapper. In this old implementation, classes are implemented around the face database and all the routines are wrapped up by the wrapper, known as RecognitionDatabase. The name of this wrapper is misleading and needs to be changed. Every face image passed to the faces engine goes through the preprocessing chain before being passed to the face recognizer. However, the preprocessing chain uses the recognizer's own preprocessing method to prepare for the facial recognition process. Therefore, it would be better to implement this preprocessing chain inside the face recognizer to avoid ambiguity and unnecessary complications. Database access for face embeddings also needs to be called inside the face recognizer during the training or recognition routine. The interface with the OpenCV neural network is also integrated into the face recognizer.

[[File:Facerecognizer.png|650px|thumb|center|'''UML diagram of DNN face recognizer''']]

======'''- Reorganization of facial recognition interfaces'''======

As mentioned in the previous section, the implementation of the interfaces to the facial recognition tasks of digiKam's faces engine is misleading and over-complicated. Therefore, it needs to be renamed and simplified. The wrapper is renamed '''FacialRecognitionWrapper'''. It still keeps its three main features:

* Identities management: to add and manage identities and their attributes,

* Face recognizer training: to train the recognizers and add face embeddings to the database for future use,

* Facial recognition: to recognize a face and return its identity as well as its attributes.

This class is simplified by removing some overhead functions and encapsulating the recognizers' tasks in their own classes. At the end of this second phase, the new DNN face recognizer has been integrated into digiKam's faces engine. I have noticed that there are some points that can be simplified and improved. Therefore, in the next phase of GSoC 2020, the main goals are to clean up the code base of the faces engine and migrate it to the DPlugin architecture; then we can focus on accelerating the processing phases of facial recognition and face detection.

=== Coding period : Phase three (July 29 to August 24) ===

In the final phase of GSoC 2020, my main goal is to check and finalize the new implementation of digiKam's faces engine. Some optimizations are made, and the recognition processing time is reduced from 64 ms/face to 20 ms/face.

===== July 29 to August 10 (Week 9 - 10) - Debug recognition error due to QImage =====

'''DONE'''

* Correct DNN recognition preprocessing

* Use pointers to pass QImages around the Face recognizer

* Experiment with other DNN face detection models

'''TODO'''

* Apply parallel processing in the face recognizer

======'''- Recognition error due to QImage copy constructor'''======

After integrating the new version of the DNN face recognizer into digiKam and running further tests, the accuracy of the DNN recognizer dropped to 74%, 10% lower than in the former tests. This drop in accuracy is due to the differences between the workflow of the unit test and digiKam's facial recognition workflow.

After further investigation, I found that the recognition error comes from the copy constructor of QImage, which is used in the preprocessing function of the DNN face recognizer. The role of this function is to convert the cropped face image from QImage to cv::Mat. Due to some unknown error in the implicit sharing mechanism of the QImage copy constructor, the preprocessed cv::Mat is corrupted, which leads to the error in the recognition process.

To resolve this issue, I decided to pass QImage* instead of QImage as arguments to the DNN face recognizer. This solution avoids the copy constructor of QImage and also avoids further duplication of the images. In addition to this change, the preprocessing chain of the DNN face recognizer is also changed to be as close as possible to OpenFace's preprocessing method, by converting all images to the ARGB32 Premultiplied format.

======'''- Face detection with other versions of YOLO and SSD'''======

As mentioned at the beginning of this report, the current problem of the face detection module of digiKam's faces engine is that SSD is much faster than YOLOv3 but misses faces that are relatively small. In this final phase of GSoC 2020, I tried out other versions of these 2 detection algorithms in the hope of achieving better performance.

Firstly, during the development of this project, 2 new versions of YOLO were released, YOLOv4 and YOLOv5, with better performance. After reading the performance reports of these new versions, I found that YOLOv4 is generally better than YOLOv5. Therefore, I decided to try YOLOv4 in the faces engine. The implementation of a face detector based on YOLOv4 is no different from the one based on YOLOv3: we only need to change the network deployment and weight data files.

However, YOLOv4 is faster than YOLOv3 only on GPU, not on CPU. At the time this project was developed, OpenCV only supported the NVIDIA CUDA driver for its DNN module. Because of this limitation, this new version of YOLO is still not a good fit for improving the processing speed of face detection in digiKam.

After trying out YOLO, I went on to try the latest version of SSD-MobileNet. With a slight change in input processing, this new version gives better performance on object detection. However, this version doesn't support face detection. The latest version of the SSD face detection network is the version already used by digiKam. In conclusion, at the moment, there is still no solution better than the current version of DNN face detection in digiKam.

===== August 11 to August 24 (Week 11 - 12) - Apply multi-thread processing on faces engine, recognition speed reduces to 20 ms/face =====

'''DONE'''

* Use mutexes to secure critical section of faces engine

* Apply OpenCV parallel_for_() function for multi-threading

====== '''- Secure critical section of faces engine''' ======

After ensuring the integration of the new DNN face recognizer, I decided to improve its processing speed by applying multi-threading. To accomplish this task, the first thing to do is to find the critical sections of this module and secure them with mutex protection.

After investigating the code of faces engine, I found that the only critical section of DNN face detection is the OpenCV neural network, and that the critical sections of DNN face recognizer are the Red-eye face aligner, the OpenCV neural network, and KD-Tree insertion. After securing these code sections with mutexes, we can proceed to run facial recognition on multiple threads.
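The locking pattern described above can be sketched as follows. This is only an illustration in Python, not the C++ code of digiKam: `SharedNetwork`, `forward` and `recognize_all` are hypothetical names, and the averaging inside `forward` is a placeholder for the real inference.

```python
import threading


class SharedNetwork:
    """Hypothetical stand-in for a neural network object that is not thread-safe."""

    def __init__(self):
        self._lock = threading.Lock()
        self.calls = 0  # shared state mutated by every forward pass

    def forward(self, face):
        # Critical section: only one thread may run the network at a time.
        with self._lock:
            self.calls += 1
            return sum(face) / len(face)  # placeholder for the real inference


def recognize_all(network, faces, workers=4):
    """Run forward() on every face from several threads, keeping input order."""
    results = [None] * len(faces)

    def work(start):
        # Each thread handles the faces at indices start, start + workers, ...
        for i in range(start, len(faces), workers):
            results[i] = network.forward(faces[i])

    threads = [threading.Thread(target=work, args=(w,)) for w in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Because every call goes through the same lock, the work inside `forward` is serialized; threads only help for the steps that happen outside the lock.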

====== '''- Parallel processing with the help of OpenCV''' ======

In faces engine, face detection and facial recognition are both mapping operations. For face detection, the operation maps an image to a list of bounding boxes of faces. For facial recognition, it maps a specific face to a corresponding label. Thanks to this nature of both operations, we can parallelize face detection and facial recognition by using QtConcurrentMap or OpenCV's parallel_for_().

After testing these two parallelization methods, the results given by OpenCV's parallel_for_() are better and more adaptive than those of QtConcurrentMap. This parallelization brings the recognition speed on the ExtendedYaleB dataset from 64 ms/face to 34 ms/face. Since the OpenFace model is lightweight, I decided to use an array of 10 Face Extractors to accelerate recognition in batch processing. This implementation brings the speed from 34 ms/face to 16 ms/face.
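The idea of sharing an array of extractors between worker threads can be sketched as below. This is a simplified illustration that uses a Python thread pool instead of OpenCV's parallel_for_(); `Extractor`, `embed` and `embed_batch` are hypothetical names, and the doubling inside `embed` is a placeholder for the real embedding extraction.

```python
import queue
from concurrent.futures import ThreadPoolExecutor


class Extractor:
    """Hypothetical stand-in for one face-embedding extractor (one network instance)."""

    def __init__(self, ident):
        self.ident = ident
        self.used = 0

    def embed(self, face):
        self.used += 1
        return [2 * x for x in face]  # placeholder for the real embedding


def embed_batch(faces, n_extractors=10):
    """Map every face to an embedding, borrowing one extractor per task."""
    extractors = [Extractor(i) for i in range(n_extractors)]
    idle = queue.Queue()
    for extractor in extractors:
        idle.put(extractor)

    def task(face):
        extractor = idle.get()  # blocks until an extractor is free
        try:
            return extractor.embed(face)
        finally:
            idle.put(extractor)  # hand the extractor back to the pool

    with ThreadPoolExecutor(max_workers=n_extractors) as executor:
        embeddings = list(executor.map(task, faces))  # results keep input order
    return embeddings, extractors
```

Since each extractor is held by at most one task at a time, no lock is needed around `embed` itself; the queue is the only shared structure.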

However, multi-threading does not help face detection; it slows it down. The main reason is that most of the processing time of detection is spent in the neural network, which is its critical section. Therefore multi-threading does not improve the speed, and the mutex handling makes it take longer to go through a batch.

== Final result ==

As the final result, the current state of digiKam's faces engine can be summarized as follows. KD-Tree is used as the main classifier of DNN face recognizer. The FaceMatrices table of FaceDb is also renewed with a simpler model. The spatial database for direct recognition on the database is prepared but its performance is not as expected. The DNN face detectors and DNN face recognizers are made thread-safe, and DNN face recognizer can train and recognize a batch of face images in parallel. These changes bring the accuracy to about 84% with a speed of about 19 ms/face.

At the end of this project, the following bugs are supposed to be fixed:

https://bugs.kde.org/show_bug.cgi?id=415895

https://bugs.kde.org/show_bug.cgi?id=416630

https://bugs.kde.org/show_bug.cgi?id=415782

== Future work ==

For future development, there are still many things to improve in digiKam's faces engine, for example:

* We can continue to try to convert this module to be based entirely on the OpenCV library, to avoid errors during the conversion between QImage and cv::Mat.

* After the merge with the face management workflow, we can export this module to the DPlugin architecture so that it is loaded dynamically by the application.

* The OpenFace model still shows some defects in face embedding extraction. FaceNet, the original model behind OpenFace, would be a good lead to improve the performance of the face recognizer.

Latest revision as of 15:40, 8 July 2021

Digikam : DNN based Faces Recognition Improvements

digiKam is a well-known open-source photo management application. With a huge effort, the developers of digiKam have implemented face detection and facial recognition features in a module called faces engine. This module implements different methods to scan faces and then label them based on the pre-tagged photos given by users.

Since last year, as a result of Thanh Trung Dinh's project during GSoC 2019, digiKam's faces engine has adopted new CNN based face processing methods. These methods have been proven to give a better performance than the traditional image processing methods implemented in digiKam. However, there are still some limitations in the current implementation of the faces engine. Therefore the main goals of this project are to continue Thanh Trung Dinh's work and improve the performance of digiKam's faces engine.

Mentors : Gilles Caulier, Maik Qualmann, Thanh Trung Dinh

Important Links

Project Proposal

Digikam DNN based Faces Recognition Improvements

GitLab development branch

gsoc20-facesengine-recognition

Contacts

Email: [email protected]

Github: MinhNghiaD

LinkedIn: https://www.linkedin.com/in/nghia-duong-2b5bbb15a/

Project Goals

The current goals of this project are to :

- Improve the accuracy of faces classifier

- Optimize the use of memory of faces engine

- Decrease storage space of faces engine

- Improve processing speed

- Re-structure faces engine architecture

- Port faces engines to Plugin architecture

Project Report

Community Bonding period (May 1 to May 31)

During this period, my main objective was to familiarize myself with the work of Thanh Trung Dinh, in order to evaluate the current implementation. After going through Thanh Trung Dinh's code and final report, I had a better understanding of the current implementation of digiKam's faces engine. Generally, the architecture of DNN faces engine can be divided into 3 main parts:

- Face detector is in charge of face detection. This module gives users the option to choose between 2 prominent face detection algorithms: YOLOv3 and SSD-MobileNet. The detected faces are cropped and then passed to Face recognizer.

- Face recognizer is in charge of the face recognition process. It receives cropped faces from the Face detector, applies face alignment, then passes the preprocessed face image through the neural network. After GSoC 2019, the CNN algorithm used by digiKam is OpenFacev1 - an implementation of [FaceNet paper].

- Face database is in charge of database operations for the storage of functional data of digiKam's faces engine. This is the link between faces engine and the digiKam application.

According to bug reports on digiKam's faces engine, the implementation of this module still has some problems that need to be addressed. The main problem reported in several bugs is that the performance of the faces engine decreases as the data set grows. Therefore, for the rest of this period, I aimed to re-evaluate the exact state of the different components of the faces engine. Because the 3 parts of the DNN version of faces engine are fully integrated into digiKam, it is difficult to evaluate the performance of each part without introducing additional bias. In order to benchmark each component of the faces engine correctly, I created replicas of digiKam's Face detector and Face recognizer as stand-alone modules that apply the previously implemented DNN algorithms, without any link to the digiKam database or the digiKam core library. After that, I programmed the first sketch of 3 unit tests for Face detector and Face recognizer.

My plan for the first 2 weeks of the coding period is to:

- Complete the unit tests for Face detector and Face recognizer.

- Search for the problems that cause the decrease of performance.

- Try out different methods for face classification.

- Compare the performances of these different techniques.

Coding period : Phase one (June 1 to June 29)

In this phase, my work mostly concentrated on building the unit tests and applying different classification methods to Face recognizer. Throughout these tests, points that need to be improved were revealed, so as to build a better and faster face recognition module.

June 1 to June 14 (Week 1 - 2) - Report of current state of digiKam's faces engine

DONE

- Unit test with GUI for Face Detector (YOLOv3 and SSD-MobileNet).

- Comparison of performance of YOLOv3 and SSD-MobileNet implementation in digiKam's faces engine.

- Unit test with GUI for Face Recognizer (OpenFacev1).

- Automatic unit test on large datasets to evaluate the performance of Face Recognizer.

- Evaluation of current recognition methods used by the faces engine.

TODO

- Add and test new recognition methods on Face recognizer in order to improve accuracy and processing speed.

- Compare the performances of these different methods.

- Debug detection errors of SSD-MobileNet.

- Speed up YOLOv3 processing time by using calculation distribution.

During these first weeks of GSoC 2020, I finalized my unit tests for 2 essential components of faces engine: Face detector and Face recognizer. The purpose of these tests is to understand the inner workings of faces engine and the reasons for the degradation over time described in bug reports.

- To verify the functionalities of Face detector, I built a test with GUI that shows the image matrices after each step of the face detection process. In this way, we can have a sense of what it is doing and then evaluate its performance.

- To verify the functionalities of Face recognizer, I built 2 tests. The first is a test with GUI that reproduces the face suggesting process. The second receives a dataset as argument, splits it into a training set and a test set, then passes them to the Face recognizer in order to evaluate its performance.

- Face Detector status

Currently, digiKam's faces engine employs 2 different CNN algorithms for face detection. One is YOLOv3 and the other is SSD-MobileNet. The implementations of these 2 algorithms perform differently in digiKam. For each image, YOLOv3 scans 10600 bounding boxes and therefore gives very high accuracy, but it takes about 400 ms per image on average. On the other hand, SSD-MobileNet scans only 20 boxes in about 20 ms per image and gives a lower accuracy. The default method used by the faces engine is SSD-MobileNet, because it is lightweight and fast.

[Figures: face detection on the same image with YOLOv3 (left) and SSD-MobileNet (right)]

Although the implementation of SSD-MobileNet performs rather well on average use cases, where all faces are clear and can be easily detected, it still has some limitations that need to be addressed. In the example above, I performed face detection using YOLOv3 and SSD-MobileNet on the same image. In the figure on the left-hand side, the Face detector powered by YOLOv3 detects most of the faces in the image. However, in the figure on the right-hand side, the Face detector powered by SSD-MobileNet cannot detect any face. Unfortunately, this problem with SSD-MobileNet occurs in several images, usually in cases where the faces are small or the images are too dark. The cause of this low accuracy could be an error in the implementation of SSD-MobileNet in digiKam or an error in the neural network files. Either way, this problem needs to be corrected in order to improve the performance of the Face detector.

However, the main scope of this project is to improve the Face Recognizer of digiKam. Therefore the work on the Face detector will be postponed to the end of the project.

- Face Recognizer status

After being detected by the Face detector, the face parts of the images are cropped and passed to Face Recognizer. Here, the face image passes through several steps to be recognized. First, the face image is transformed into cv::Mat, and then scaled to a static ratio defined by Face recognizer. After that, the face is aligned based on the position of eyes, nose, and lip, before being passed through the Neural Network to output a 128-dimensional vector called face embedding. Finally, the output face embedding is compared with registered faces to predict the corresponding identity.

The current classifying method used by digiKam is based on the cosine distance of face embeddings, measured here as the cosine of the angle between 2 vectors: the greater the cosine, the more similar the 2 faces are. In order to predict the label of a face, digiKam's Face recognizer calculates the mean cosine distance between the face and the pre-registered face embeddings of each label. The Face recognizer then picks the label with the highest mean value, provided it is greater than a certain threshold, as its prediction.
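The decision rule described above can be sketched in a few lines. This is a minimal illustration, not digiKam's C++ implementation: the function names and the dictionary layout are assumptions, and the default threshold of 0.7 is the value used in the tests reported below.

```python
import math


def cosine(u, v):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def predict_mean_cosine(embedding, registered, threshold=0.7):
    """Return the label with the highest mean cosine above threshold, else None.

    `registered` maps each label to the list of embeddings stored for it.
    """
    best_label, best_score = None, threshold
    for label, vectors in registered.items():
        mean = sum(cosine(embedding, v) for v in vectors) / len(vectors)
        if mean > best_score:
            best_label, best_score = label, mean
    return best_label
```

Every prediction compares the face with all registered embeddings, so the cost of one call grows with the total number of stored faces.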

To examine the result of each step, I implemented a unit test with GUI as an extension of the unit test for the Face detector. This test displays the transformation of face images through the recognition process, and it includes a simple control panel for testers to perform a simple recognition work-flow. This test gives an intuition of what the Recognizer is doing and therefore facilitates debugging.

In addition to this test, I implemented a performance test for Face recognizer. This performance test receives a face dataset and a train/test ratio as inputs. The test splits the dataset according to the split ratio: the training set is registered with its labels by the Face recognizer, and the test set is used to verify the facial recognition process. The splitting step is completely random to ensure the integrity of the test. With the help of this test, I can evaluate the actual performance (accuracy and speed) of digiKam's Face recognizer.
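The splitting step of such a performance test can be sketched as follows. This is an illustration under assumptions: `split_dataset` and its `seed` parameter are hypothetical, and the real test works on image files rather than on in-memory pairs.

```python
import random


def split_dataset(samples, train_ratio, seed=None):
    """Randomly split (image, label) pairs into a training set and a test set."""
    rng = random.Random(seed)  # a fixed seed makes a run reproducible
    shuffled = list(samples)   # copy so that the caller's list is untouched
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]
```

The training part would then be registered with its labels, and the accuracy is the fraction of test samples whose predicted label matches the stored one.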

At first, I applied the performance test on the Yalefaces dataset, which contains 166 pre-labeled face images. On this small dataset, the performance of the mean cosine distance method is rather good: 88.8889 % accuracy at a speed of 75.3333 ms/face, with threshold 0.7 on a total of 121 training faces and 45 test faces. However, when I performed the same test on the Extended Yale B dataset, which contains 16380 pre-labeled face images, the accuracy shrank to 0 %. To be specific, the mean cosine distance method gives 0 % accuracy at a speed of 626.485 ms/face, with threshold 0.7 on a total of 11469 training faces and 4911 test faces. The main reason for this poor accuracy is that it fails to recognize faces because the mean cosine distance is too small. This is the same problem that appeared in several bug reports.

This degradation of Face recognizer is due to the poor adaptability of the mean cosine distance method to a big dataset. Because of the nature of this method, when the data related to an entity grows, the dispersion of the data makes the mean distance smaller. The calculation of mean cosine distance is also exhaustive: its time complexity increases linearly with the size of the data. Therefore, the more data it gets, the poorer its performance becomes. Furthermore, due to the mathematical nature of the cosine function, the partition capacity of this method is limited. In general, data classification is to find a way to partition data into different groups. Because cos(x) : [0°, 180°] --> [-1, 1] is injective, a threshold on the cosine is equivalent to a limit on the angle: even with a high threshold of 0.86, all vectors within a cone of about 30° are partitioned into the same group. Therefore, the more labels we have, the more collisions occur between these data partitions.
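The link between a cosine threshold and the opening of the cone can be checked with a short calculation. The helper name `threshold_to_angle` is hypothetical and only serves this illustration.

```python
import math


def threshold_to_angle(threshold):
    """Angle in degrees below which two vectors pass a given cosine threshold."""
    return math.degrees(math.acos(threshold))
```

For a threshold of 0.86 this gives an angle slightly above 30°, since cos(30°) is approximately 0.866.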

June 15 to June 29 (Week 3 - 4) - 84% accuracy and 104.804 ms/face speed on the Extended Yale B dataset

DONE

- Apply Machine Learning classifiers on Face recognizer.

- Accuracy improvement from 0% to 84% on the Extended Yale B dataset.

- Processing speed improvement from 671.449 ms/face to 104.804 ms/face on the Extended Yale B dataset.

- High dimensional data partitioning with KD-Tree.

- Implementation of online learning in Face recognizer.

- First sketch of database model for Face recognizer.

TODO

- Fully integrate new improvements to the faces engine.

- Reorganize the databases of the faces engine.

- Re-verify face aligning process to avoid outlier face embeddings.

- Apply map-reduce to distribute the calculations on multiple threads.

- Port faces engine to plug-in architecture

- Test UMAP Dimensionality reduction algorithm to have an insight into the global structure of face embedding.

As stated in the previous section, the mean cosine distance is not fit for a face classifier. Therefore during these 2 weeks, I focused on implementing new classification methods and compared their performances.

- New face classifiers

OpenFace trained their convolutional neural network by optimizing the triplet loss of Euclidean distances between face embeddings. This optimization ensures that face embeddings belonging to the same person are close to each other, and that embeddings of 2 different persons are far apart. Because of this property, I tried several classification methods that can distinguish vector representations. Here is the list of classification methods that I have tried during this period:

- Closest Cosine distance,

- Closest Euclidean distance,

- Support vector machine with linear kernel,

- Machine Learning K-Nearest neighbors,

- Traditional K-Nearest neighbors with KD-Tree.

After several tests, the Machine Learning K-Nearest neighbors method appears to perform better than the other methods. However, this method is based on statistics and can therefore have some biases. Therefore I wanted to try out the traditional K-Nearest neighbors with a vote counting mechanism. With the help of a KD-Tree, a binary search tree that can partition high dimensional vectors, the search for the K nearest nodes to a given position becomes more efficient, with a time complexity of O(log(n)).
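A K-Nearest neighbors search with vote counting over a KD-Tree can be sketched as follows. This is a minimal illustration and not digiKam's implementation: all names are hypothetical, the tree is not balanced, and `predict` assumes that the tree contains at least one node.

```python
import heapq
from collections import Counter


class Node:
    def __init__(self, point, label, axis):
        self.point, self.label, self.axis = point, label, axis
        self.left = self.right = None


def insert(root, point, label, depth=0):
    """Insert a labeled point; the split axis cycles with the depth."""
    if root is None:
        return Node(point, label, depth % len(point))
    if point[root.axis] < root.point[root.axis]:
        root.left = insert(root.left, point, label, depth + 1)
    else:
        root.right = insert(root.right, point, label, depth + 1)
    return root


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def k_nearest(root, target, k):
    """Return up to k (squared distance, label) pairs, closest first."""
    heap = []  # max-heap on distance, stored as (-distance, visit order, label)
    visited = 0

    def visit(node):
        nonlocal visited
        if node is None:
            return
        visited += 1
        d = sq_dist(node.point, target)
        if len(heap) < k:
            heapq.heappush(heap, (-d, visited, node.label))
        elif d < -heap[0][0]:
            heapq.heapreplace(heap, (-d, visited, node.label))
        diff = target[node.axis] - node.point[node.axis]
        near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
        visit(near)
        # Visit the far side only if the splitting plane is closer
        # than the worst neighbor kept so far.
        if len(heap) < k or diff * diff < -heap[0][0]:
            visit(far)

    visit(root)
    return sorted((-neg_d, label) for neg_d, _, label in heap)


def predict(root, target, k=3):
    """Majority vote among the k nearest neighbors."""
    votes = Counter(label for _, label in k_nearest(root, target, k))
    return votes.most_common(1)[0][0]
```

The pruning test on the splitting plane is what lets the search skip whole sub-trees instead of comparing the target with every stored embedding.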

- Performances comparison

After integrating the new face classifier methods into digiKam's Face recognizer, the results of the performance tests of these methods on the Extended Yale B dataset are indicated in the table below:

| | Closest Cosine distance | Closest Euclidean distance | SVM | Machine learning KNN | KD-Tree KNN |
|---|---|---|---|---|---|
| Accuracy (%) | 83.7179 | 83.7179 | 81.6667 | 82.6923 | 84.001 |
| Speed (ms/face) | 550.321 | 469.642 | 79.9364 | 82.7103 | 121.379 |

The performance comparison above shows that, among the implemented classification methods, the K-Nearest neighbors methods give a better performance in both accuracy and speed. As explained in the previous section, the K-Nearest neighbors algorithm has a hyperparameter K that indicates the maximum number of neighbor nodes that can participate in the voting process. In order to choose this hyperparameter and to have a better understanding of the dispersion of face embeddings, I tested the K-Nearest Neighbors method with several values of K. The results of my tests are indicated in the table below:

| Parameter K | 1 | 3 | 5 | 7 | 9 | 11 | 13 | 15 |
|---|---|---|---|---|---|---|---|---|
| Accuracy (%) | 82.3057 | 83.0052 | 82.8238 | 82.772 | 82.9275 | 82.8756 | 82.9016 | 82.487 |
| Speed (ms/face) | 107.353 | 107.353 | 110.823 | 112.779 | 112.498 | 116.119 | 116.421 | 126.256 |

The results of these tests indicate that, in general, the processing time per face increases with K, while the accuracy fluctuates a little. When K = 3, the classifier gives the best results. However, this fluctuation indicates that the main reasons for classification errors are outliers and that there are outliers across the dataset. Normally, a good face recognition model like OpenFace should be able to avoid this problem. I suspect that it could be a problem in the preprocessing step of digiKam's Face recognizer. Either way, this problem with outlier data has to be looked into in detail in the next step of the project.

- Data storage for face classifier

An 82 % accuracy and 112 ms/face on average is an acceptable performance for now. Therefore I decided to proceed with the implementation of data storage for Face recognizer. Up to this moment, the best classification methods are Machine learning K-Nearest Neighbors and KD-Tree K-Nearest Neighbors.

From the perspective of machine memory, the one with Machine learning optimization only needs to load its trained classifier to work. On the other hand, the one with the KD-Tree gives better accuracy but it has to keep all face vectors in memory in order to navigate. From the perspective of database storage, one of the advantages of machine learning methods is that they can perform online learning, in which training data needs to be passed through the classifier only once and, after that, only the label needs to be stored in the database. However, currently, the Machine learning module of OpenCV doesn't support online learning yet. For KD-Tree, we have to either store the entire tree in memory during execution or perform K-Nearest search directly on the database. In order to perform K-Nearest search on the database, each entry has to be stored as a Tree node and the interfaces have to perform the search dynamically.

Because of these reasons, we can choose to re-train the machine learning models every time new data is registered, to store the KD-Tree, or to implement a spatial table for K-Nearest search on the database. For the next phase of GSoC 2020, I plan to complete and test these designs of Face recognizer. After that, I will fully integrate the new version of Face recognizer into the rest of the faces engine. Finally, some parallel processing has to be implemented in the faces engine to improve its speed. Furthermore, since the new version of the faces engine does not depend much on the rest of digiKam, it can be ported to a plug-in architecture.

Coding period : Phase two (July 1 to July 27)

The main goal of this phase is to finish the database implementation for DNN face recognizer of digiKam's faces engine and to complete the integration of DNN face recognizer to the faces engine.

June 30 to July 14 (Week 5 - 6) - Database storage for digiKam's faces engine

DONE

- Plot UMAP dimensionally reduced face embedding

- Implement Label database

- Implement Spatial storage for K-Nearest search directly on the database

- Implement face embedding database

TODO

- Fully integrate new improvements to the faces engine.

- Apply parallel processing in faces recognizer

- Port faces engine to plug-in architecture

- UMAP examination of faces embedding

At the beginning of this second phase, I wished to have a better understanding of the distribution of face embeddings produced by the OpenFace model. UMAP is a great tool for this: it is an algorithm that learns the internal structure of high dimensional data in order to regenerate low dimensional data, which makes it possible to reduce high dimensional vectors to 2D for plotting. I therefore plotted the face embeddings produced by the OpenFace neural network by using UMAP.

In the first figure, we have the plot of face embeddings from the entire Extended Yale B dataset. Here we can see the face embeddings gather into different groups. However, there are several outliers that mix with one another. These are the faces that the faces engine fails to recognize. In the second figure, we have a better plot of these errors. Most of these errors come from images that are too dark or half-dark. Therefore, better preprocessing might help to avoid these errors.

- Face embedding storage

For labeling entities, digiKam has already reserved a table named Identities. This table contains the ID of each person. These IDs are then referenced in the IdentityAttributes table, a key-value table that contains the attributes of each identity.

In the last versions of the faces engine, face embedding generated by the recognition algorithm is stored in a table named FaceMatrices. This table contained many attributes that became obsolete and unnecessary. Therefore, for the sake of this module, I decided to remove some unnecessary attributes, in order to simplify the storage model.

As stated in the figure above, the new version of FaceMatrices table only contains an artificial key to specify an entry, a reference to the identity of the face, the context of a registration, and the face embedding binary data itself. For now, the operations on this table are to save the face embedding extracted by the neural network, then use it to reconstruct the KD-Tree for KNN search, or re-train the OpenCV Machine learning model at the initiation of the Face classifier.

The main advantage of this table is its simplicity, which leads to the rapidity and simplicity of the operations. Every operation costs only one database access. However, during the lifetime of the face recognizer, all face embeddings have to be stored in machine memory in order to accelerate the recognition process.

- Spatial table for K-Nearest search

Another option for face embeddings storage is to store them in a spatial manner. Because the current version of SQL drivers supported by Qt doesn't implement spatial indexing, I implemented a database model inspired by KD-Tree structure that can perform k-nearest search. The database model is stated as below.

An entry of this table is a node of a KD-Tree. By referencing other entries as sub-tree, we can implement a KD-Tree on the database. The INSERT operation performs a simple binary search to find the parent of the inserting node in order to modify its sub-tree references. The K-Nearest search performs a binary search directly on the database in the same manner as KD-Tree nearest search. In both operations, multiple queries are made and it takes O(1) space complexity and O(N) time complexity.
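The INSERT operation described above can be sketched with SQLite. This is only an illustration under assumptions: the table name `kd_nodes`, its columns and the helper functions are hypothetical and do not reproduce the actual FaceDb schema, and the root is taken to be the first inserted row.

```python
import sqlite3


def create_tree(conn, dims):
    """Create a table where every row is one KD-Tree node with `dims` coordinates."""
    cols = ", ".join(f"c{i} REAL NOT NULL" for i in range(dims))
    conn.execute(
        "CREATE TABLE kd_nodes (id INTEGER PRIMARY KEY, label TEXT NOT NULL, "
        f"axis INTEGER NOT NULL, left_id INTEGER, right_id INTEGER, {cols})"
    )


def insert_node(conn, point, label):
    """Walk down from the root with one query per level, then link the new node."""
    dims = len(point)
    coords = ", ".join(f"c{i}" for i in range(dims))
    marks = ", ".join("?" for _ in point)
    row = conn.execute("SELECT id FROM kd_nodes ORDER BY id LIMIT 1").fetchone()
    current = row[0] if row else None  # the first inserted node is the root
    parent, side, depth = None, None, 0
    while current is not None:
        row = conn.execute(
            f"SELECT axis, left_id, right_id, {coords} FROM kd_nodes WHERE id = ?",
            (current,),
        ).fetchone()
        axis, left_id, right_id = row[0], row[1], row[2]
        # Compare along the split axis of the current node to choose a side.
        side = "left_id" if point[axis] < row[3 + axis] else "right_id"
        parent, current = current, (left_id if side == "left_id" else right_id)
        depth += 1
    cur = conn.execute(
        f"INSERT INTO kd_nodes (label, axis, {coords}) VALUES (?, ?, {marks})",
        (label, depth % dims, *point),
    )
    if parent is not None:
        # Update the sub-tree reference of the parent found by the search.
        conn.execute(f"UPDATE kd_nodes SET {side} = ? WHERE id = ?", (cur.lastrowid, parent))
    return cur.lastrowid
```

Only one row is held in memory at a time, at the price of one query per visited node.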

The main advantage of this method is that it does not cost machine memory to perform prediction. However, it takes a little more storage space for the structure and a little more query time. The current performance of this recognition method is 83% accuracy with a speed of 300 ms/face on average.

To sum up, the figure below specifies the UML diagram of FaceDb for DNN face recognizer:

July 15 to July 27 (Week 7 - 8) - Full integration of new Face recognizer to Faces engine

DONE

- Adapt implementation of Face recognizer to faces engine's implementation

- Reorganize Facial recognition interface of digiKam's faces engine

- Integrate databases for DNN face recognizer to digiKam's FaceDb

- Code documentation

TODO

- Run more tests to thoroughly verify the new implementation

- Apply parallel processing in faces recognizer

- Improve implementation of faces engine

- Improve preprocessing phase of facial recognition

- Integration of new DNN face recognizer to faces engine

Up to this point, the new DNN face recognizer was nearly complete. The main task of this phase is to fully integrate the new face recognizer to the rest of digiKam's faces engine. The first thing that needed to be done is to update the database diagrams of Faces Database. For these changes to take effect, the Faces Database must be updated in order to trigger the Schema updater. The current version of Faces database is version 3, these changes in database structure make it to version 4. Furthermore, database interfaces for DNN face recognizer also needed to be rewritten.

The class hierarchy of the new DNN Face recognizer is also simplified. The old hierarchy of facial recognition in faces engine is composed of 3 main parts: the face database, the face recognizers, and the facial recognition wrapper. In this old implementation, classes are implemented around the face database and all the routines are wrapped up by the wrapper, known as RecognitionDatabase. The name of this wrapper is misleading and needs to be changed. Every face image passed to the faces engine goes through the preprocessing chain before being passed to the face recognizer. However, the preprocessing chain uses the recognizer's own preprocessing method to prepare for the facial recognition process. Therefore, it would be better to implement this preprocessing chain inside the face recognizer to avoid ambiguity and unnecessary complications. Database access for face embeddings also needs to be called inside the face recognizer during the training or recognizing routine. The interface with the OpenCV Neural network is also integrated into the Face recognizer.

- Reorganization of facial recognition interfaces

As mentioned in the previous section, the implementation of the interfaces to facial recognition tasks of digiKam's faces engine is misleading and over-complicated. Therefore it needs to be renamed and simplified. This wrapper is then renamed as FacialRecognitionWrapper. The wrapper still keeps its three main features:

- Identities management: to add and manage identities and their attributes,

- Face recognizer training: to train the recognizers and add face embedding to the database for future usages,

- Facial recognition: to recognize a face and return its identity as well as the attributes.

This class is simplified by removing some overhead functions and encapsulating recognizers' tasks in their own classes. At the end of this second phase, the new DNN face recognizer has been integrated with digiKam's faces engine. I have noticed that there are some points that can be simplified and improved. Therefore, in the next phase of GSoC 2020, the main goals are to clean the code base of the faces engine and migrate it to the DPlugin architecture, then we can focus on accelerating the processing phases of facial recognition and face detector.

Coding period : Phase three (July 29 to August 24)

In the final phase of GSoC 2020, my main goal is to check and finalize the new implementation of digiKam's faces engine. Some optimizations are made and the recognition processing time is reduced from 64 ms/face to 20 ms/face.

July 29 to August 10 (Week 9 - 10) - Debug recognition error due to QImage

DONE

- Correct DNN recognition preprocessing

- Use pointers to pass QImages around Face recognizer

- Experiment on other DNN Face detection models

TODO

- Apply parallel processing in faces recognizer

- Recognition error dues to QImage copy constructor

After integrating the new version of DNN Face recognizer into digiKam and running further tests, the accuracy of DNN recognizer reduced to 74%, 10% lower than the former tests. This chute in the accuracy is due to the differences between the workflows of the unit test and digiKam's facial recognition workflow.

After further investigations, the cause of the recognition error comes from the copy constructor of QImage, which is used in the preprocessing function of the DNN face recognizer. The role of this function is to transform the cropped face image from QImage to cv::Mat. Due to some unknown error in the implicit sharing mechanism of QImage copy constructor, the preprocessed cv::Mat is disturbed and leads to the error in the recognition process.

To resolve this issue, I decided to pass QImage* instead of QImage as the argument to the DNN face recognizer. This avoids the copy constructor of QImage and also avoids further duplication of the images. In addition to this change, the preprocessing chain of the DNN face recognizer was changed to be as close as possible to OpenFace's preprocessing method, by converting all images to the ARGB32 Premultiplied format.

- Face detection with other versions of YOLO and SSD

As mentioned at the beginning of this report, the current problem of the face detection module of digiKam's faces engine is that SSD is much faster than YOLOv3 but misses faces that are relatively small. In this final phase of GSoC 2020, I tried out other versions of these 2 detection algorithms in the hope of achieving better performance.

Firstly, during the development of this project, two new versions of YOLO were released: YOLOv4 and YOLOv5, both with better performance. After reading the performance reports of these new versions, I found that YOLOv4 is generally better than YOLOv5. Therefore, I decided to try YOLOv4 in the faces engine. The implementation of a face detector based on YOLOv4 is no different from the one based on YOLOv3: we only need to change the network deployment and weight data files.

However, YOLOv4 is faster than YOLOv3 only on GPU, not on CPU. At the time this project was developed, OpenCV only offered NVIDIA CUDA driver support for its DNN module. Given this limitation, this new version of YOLO is still not suitable for improving the processing speed of face detection in digiKam.

After trying out YOLO, I went on to try the latest version of SSD-MobileNet. With a slight change in input processing, this new version gives better performance on object detection. However, this version doesn't support face detection. The latest version of the SSD face detection network is the version already used by digiKam. In conclusion, at the moment, there is still no better solution than the current version of DNN face detection in digiKam.

August 11 to August 24 (Week 11 - 12) - Apply multi-threaded processing to faces engine, recognition time reduced to 20 ms/face

DONE

- Use mutexes to secure critical section of faces engine

- Apply OpenCV parallel_for_() function for multi-threading

- Secure critical section of faces engine

After ensuring the integration of the new DNN face recognizer, I decided to improve its processing speed by applying multi-threaded processing. To accomplish this task, the first thing to do is to find the critical sections of this module and secure them with mutex protection.

After investigating the code of the faces engine, I found that the only critical section of DNN face detection is the OpenCV neural network, and that the critical sections of the DNN face recognizer are the Red-eye face aligner, the OpenCV neural network, and KD-Tree insertion. After securing these code sections with mutexes, we can proceed to run facial recognition on multiple threads.
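A minimal sketch of this kind of mutex protection, using only the C++ standard library. `GuardedTree` and `insertConcurrently` are hypothetical names chosen for illustration and do not come from digiKam's code; the container simply stands in for a shared structure such as the KD-Tree during insertion.

```cpp
#include <cassert>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical stand-in for a shared structure that is not thread-safe
// on its own (for example a tree that several threads insert into).
class GuardedTree
{
public:

    // Insertion is the critical section: only one thread may modify
    // the underlying container at a time.
    void insert(int label)
    {
        std::lock_guard<std::mutex> lock(m_mutex);
        m_labels.push_back(label);
    }

    std::size_t size() const
    {
        std::lock_guard<std::mutex> lock(m_mutex);
        return m_labels.size();
    }

private:

    mutable std::mutex m_mutex;
    std::vector<int>   m_labels;
};

// Inserts perThread labels from each of threadCount threads and
// returns the number of stored labels.
std::size_t insertConcurrently(int threadCount, int perThread)
{
    assert(threadCount > 0 && perThread >= 0);

    GuardedTree              tree;
    std::vector<std::thread> workers;

    for (int t = 0; t < threadCount; ++t)
    {
        workers.emplace_back([&tree, t, perThread]()
        {
            for (int i = 0; i < perThread; ++i)
            {
                tree.insert(t * perThread + i);
            }
        });
    }

    for (std::thread& worker : workers)
    {
        worker.join();
    }

    return tree.size();
}
```

With the lock in place, no insertion is lost regardless of how the threads interleave. Without it, concurrent `push_back` calls on the same vector would be a data race.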

- Parallel processing with the help of OpenCV

In the faces engine, face detection and facial recognition are both mapping operations. Face detection maps an image to a list of bounding boxes of faces. Facial recognition maps a specific face to its corresponding label. Thanks to this property, we can parallelize face detection and facial recognition by using QtConcurrentMap or OpenCV's parallel_for_().

After testing these two parallelization methods, the results given by OpenCV's parallel_for_() are better and more adaptive than those of QtConcurrentMap. This parallelization brings the recognition time on the ExtendedYaleB dataset from 64 ms/face down to 34 ms/face. Since the OpenFace model is lightweight, I decided to use an array of 10 Face Extractors to accelerate recognition in batch processing. This implementation brings the time from 34 ms/face down to 16 ms/face.
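The idea of a pool of extractors can be sketched as follows. This is only an illustration under stated assumptions: it uses `std::thread` instead of OpenCV's parallel loop so that it stays self-contained, and `Extractor`, `kPoolSize` and `recognizeBatch` are hypothetical names, with a trivial computation standing in for the neural network.

```cpp
#include <array>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical stand-in for a face extractor that owns its own network.
// Each instance has its own mutex, so threads only wait for each other
// when they happen to pick the same instance.
struct Extractor
{
    std::mutex mutex;

    // Placeholder for the real embedding extraction and classification.
    int extract(int face)
    {
        return face * face;
    }
};

constexpr std::size_t kPoolSize = 10;

// Maps every face of the batch to a label, using threadCount workers
// that share a fixed pool of extractors.
std::vector<int> recognizeBatch(const std::vector<int>& faces, unsigned threadCount)
{
    assert(threadCount > 0);

    std::array<Extractor, kPoolSize> pool;
    std::vector<int>                 labels(faces.size());
    std::atomic<std::size_t>         next{0};

    auto worker = [&]()
    {
        // Each worker repeatedly claims the next unprocessed index.
        for (std::size_t i = next.fetch_add(1); i < faces.size(); i = next.fetch_add(1))
        {
            Extractor& extractor = pool[i % kPoolSize];
            std::lock_guard<std::mutex> lock(extractor.mutex);
            labels[i] = extractor.extract(faces[i]);
        }
    };

    std::vector<std::thread> workers;

    for (unsigned t = 0; t < threadCount; ++t)
    {
        workers.emplace_back(worker);
    }

    for (std::thread& w : workers)
    {
        w.join();
    }

    return labels;
}
```

Because each result is written to its own slot of `labels`, the output order matches the input order. Compared with a single extractor behind a single mutex, the pool lets up to `kPoolSize` extractions run at the same time, at the cost of holding several copies of the model in memory.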

However, multi-threaded processing does not help face detection; it actually slows it down. The main reason is that most of the detection time is spent in the neural network, which is the critical section. Therefore, multi-threading does not improve the speed, and the mutex handling makes it take longer to process a batch.
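This behaviour is consistent with Amdahl's law. As a rough model (not a measurement from this project), let p be the fraction of the per-image work that can run outside the mutex and n the number of threads; the best possible speedup is then:

```latex
S(n) = \frac{1}{(1 - p) + \dfrac{p}{n}}
```

For a hypothetical p = 0.1 and n = 4, this gives S = 1 / (0.9 + 0.025) ≈ 1.08. As p approaches 0, S approaches 1, and since the formula ignores the cost of locking, the real result can fall below 1.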

Final result

As the final result, the current state of digiKam's faces engine can be summarized as follows. KD-Tree is used as the main classifier of the DNN face recognizer. The FaceMatrices table of FaceDb is also renewed with a simpler model. The spatial database for direct recognition on the database is prepared, but its performance is not as expected. The DNN face detectors and DNN face recognizers are made thread-safe, and the DNN face recognizer can train on and recognize a batch of face images in parallel. These changes result in an accuracy of about 84% with a speed of about 19 ms/face.

At the end of this project, the following bugs are expected to be fixed:

- https://bugs.kde.org/show_bug.cgi?id=415895

- https://bugs.kde.org/show_bug.cgi?id=416630

- https://bugs.kde.org/show_bug.cgi?id=415782

Future work

For future development, there are still many things to improve in digiKam's faces engine, for example:

- We can continue to try to convert this module to be based entirely on the OpenCV library, to avoid errors during the conversion between QImage and cv::Mat.

- After the merge with the face management workflow, we can port this module to the DPlugin architecture so that it is loaded dynamically by the application.

- The OpenFace model still shows some defects in face embedding extraction. FaceNet, the model on which OpenFace is based, would be a good lead to improve the performance of the face recognizer.